Security frameworks have always had a gap. They tell you to find vulnerabilities and fix them, but they’ve rarely provided a system to determine which ones actually matter before you tap into your most expensive resource: engineering time.

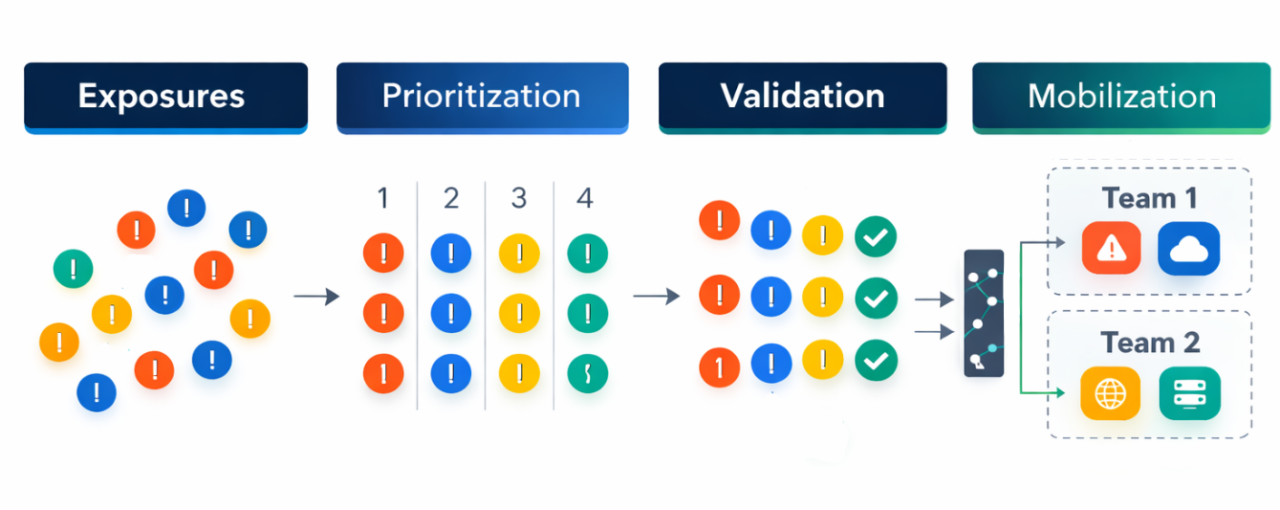

Continuous Threat Exposure Management (CTEM) changes the game by treating security as a continuous lifecycle rather than a series of silos. Its Validation stage isn't an isolated step; the framework defines it as the critical pivot point that connects Discovery and Prioritization to actual Mobilization.

By doing so, CTEM creates a structured, evidence-based bridge between "we found something" and "we need to fix it." It also changes the conversation, refocusing security KPIs from raw counts of findings to actual evidence that an attacker can exploit a specific issue on a specific asset, right now.


The Noise of "Critical" Severity

Most security tools were built to find CVEs. That's their value proposition. The more issues they surface, the more value they appear to deliver. Bigger numbers create an illusion of coverage while masking actual exposure.

That model made sense when environments were simpler. Most infrastructure was on-prem, relatively static, and the volume of known vulnerabilities was manageable. Finding a CVE meant something: it usually pointed to a real problem on an asset someone knew about.

That world doesn't exist anymore. Organizations operate across sprawling hybrid environments with assets spinning up and changing constantly. The number of published CVEs has exploded. Misconfigurations have become as common as software bugs. And the tools built for that earlier era keep doing what they were designed to do: find more, flag more, score more.

The result is a flood of "critical" findings that aren't actually critical. A CVSS base score measures a vulnerability's theoretical severity in isolation, without accounting for network topology, security controls, or whether an attacker can even reach the asset. A CVSS 9.8 on an internal dev server, or on an asset protected by a security control such as a WAF, gets the same score as one on a customer-facing payment gateway. They're not the same risk. Not even close.
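To make the contrast concrete, here is a minimal Python sketch of context-adjusted prioritization. The `Finding` fields, the `contextual_priority` function, and the 0.3 and 0.5 multipliers are hypothetical, illustrative choices for this example only; they are not CyCognito's scoring model, and CVSS's own Environmental metrics offer a standardized (and different) way to adjust for an organization's setting.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    asset: str
    cvss: float            # CVSS base score, 0.0-10.0
    internet_facing: bool  # reachable by an external attacker?
    behind_waf: bool       # is a compensating control in front of it?


def contextual_priority(f: Finding) -> float:
    """Scale the base score by hypothetical reachability and control factors."""
    score = f.cvss
    if not f.internet_facing:
        score *= 0.3  # illustrative weight: internal-only assets are harder to reach
    if f.behind_waf:
        score *= 0.5  # illustrative weight: a WAF may block common exploit payloads
    return round(score, 2)


findings = [
    Finding("payment-gateway", 9.8, internet_facing=True, behind_waf=False),
    Finding("internal-dev", 9.8, internet_facing=False, behind_waf=False),
    Finding("waf-protected-app", 9.8, internet_facing=True, behind_waf=True),
]

# Same CVSS everywhere, very different order once context is applied.
for f in sorted(findings, key=contextual_priority, reverse=True):
    print(f"{f.asset:<18} CVSS {f.cvss}  contextual {contextual_priority(f)}")
```

The specific weights matter less than the shape of the idea: identical base scores diverge once reachability and controls enter the calculation.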

So backlogs grow faster than anyone can address them. Security keeps chasing vulnerabilities, engineering pushes back, and the whole organization spends more effort managing the queue than actually reducing risk. Everyone gets busier but nothing gets safer.

This is the core problem CTEM's Validation stage is designed to solve.

Validation as Permission to Ignore

The word "ignore" makes people uncomfortable in security. But every security team already ignores things; they just do it without a system. They deprioritize based on gut feel, push findings to the bottom of the queue, or let tickets age out quietly. With CTEM, ignoring becomes a deliberate, evidence-based decision. Or as Gartner puts it, "Validation works as a filter to prove which discovered exposures could actually impact the organization."

If an issue can't actually be exploited right now, it gets handled through normal hygiene: patching cycles, infrastructure refreshes, routine maintenance. No escalation. No executive dashboard. No engineer pulled off planned work.

If it can, it escalates with proof. Not just a score. Actual evidence: here's the asset, here's the exploit path, here's the business impact.
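The decision rule above can be sketched in a few lines of Python. The `ValidationResult` fields and the `route` function are hypothetical names for illustration, not a CyCognito API, and the sketch assumes an earlier validation step has already determined whether each issue is exploitable.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ValidationResult:
    asset: str
    issue: str
    exploitable: bool                     # confirmed by an attacker-perspective test?
    exploit_path: Optional[str] = None    # evidence: how the attack works
    business_impact: Optional[str] = None # evidence: why it matters


@dataclass
class Queues:
    escalations: List[ValidationResult] = field(default_factory=list)
    hygiene: List[ValidationResult] = field(default_factory=list)


def route(results: List[ValidationResult]) -> Queues:
    """Escalate only confirmed-exploitable issues; send the rest to routine hygiene."""
    queues = Queues()
    for r in results:
        if r.exploitable:
            # An escalation must carry proof, not just a score.
            if not (r.exploit_path and r.business_impact):
                raise ValueError(f"{r.asset}: exploitable finding is missing evidence")
            queues.escalations.append(r)
        else:
            # Patching cycles, infrastructure refreshes, routine maintenance.
            queues.hygiene.append(r)
    return queues


results = [
    ValidationResult("shop.example.com", "SQL injection", True,
                     exploit_path="login form -> users table",
                     business_impact="customer PII exposure"),
    ValidationResult("dev.internal", "outdated OpenSSL", False),
]
q = route(results)
print(len(q.escalations), len(q.hygiene))
```

Raising on an exploitable finding without evidence is a deliberate choice in this sketch: it keeps "escalates with proof" from quietly degrading back into "escalates with a score."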

Security stops managing "vulnerabilities" and starts addressing confirmed exploitable issues. The backlog shrinks because the problem space narrows to what genuinely threatens the business. Remediation happens faster because it's focused on real risk, and engineering hours spent on unplanned, emergency remediation can shrink by 60–80%. Leadership gets cleaner reporting because the numbers reflect actual exposure, not scan output.

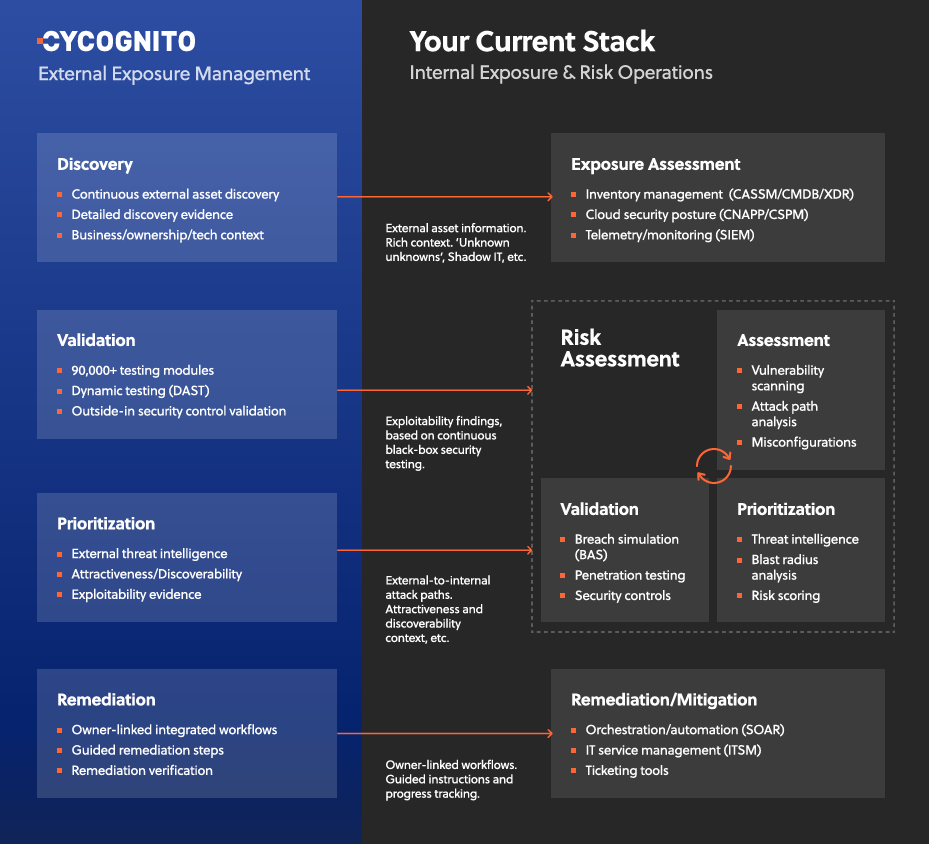

Continuous EEM and Validation with CyCognito

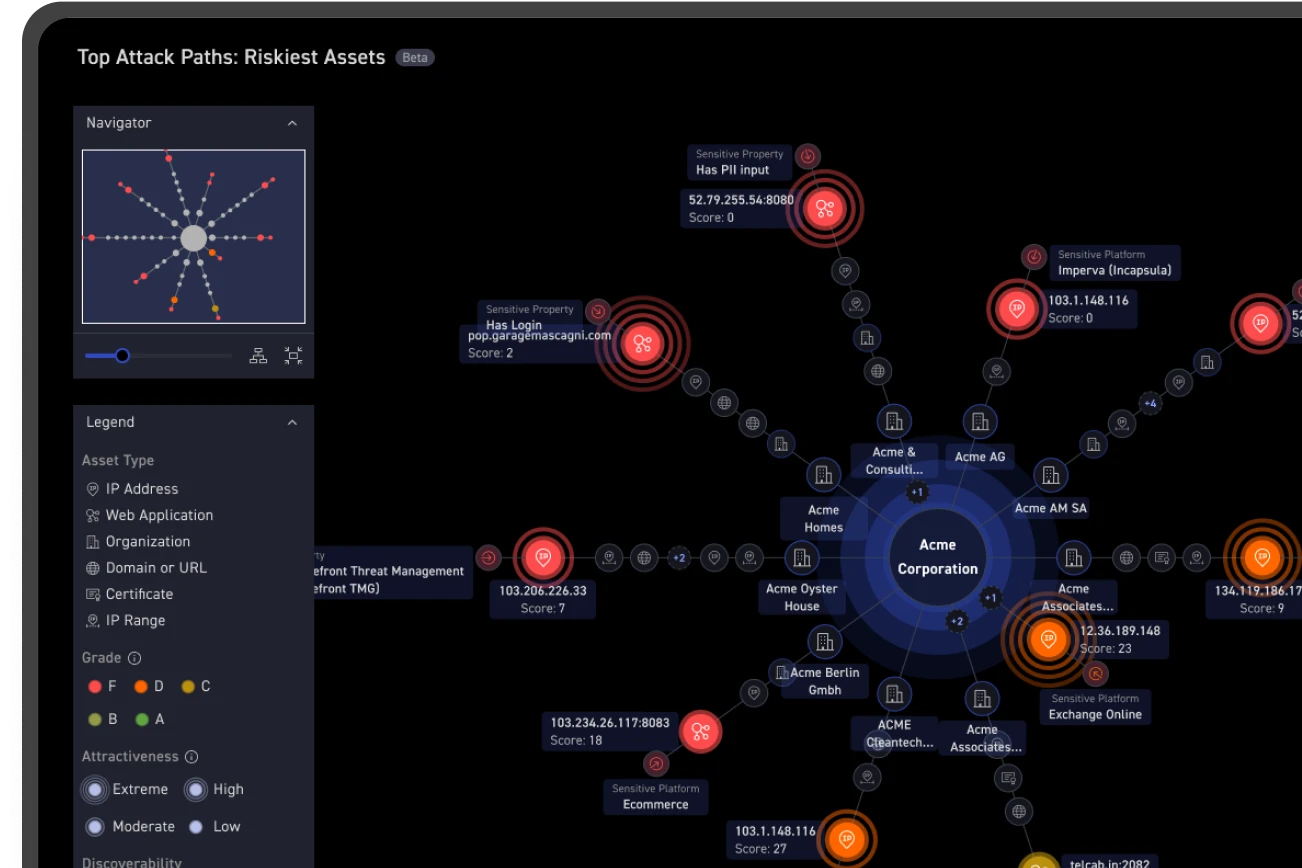

CyCognito delivers validated findings from the attacker's perspective. Every flagged issue isn't just exploitable in theory; it's reachable from the outside, discoverable, and attractive enough for an attacker to pursue. The platform combines active security testing with threat intelligence, attack path analysis, and attacker-perspective signals like discoverability and attractiveness.

Most organizations validate in narrow slices: an annual pentest, a red team exercise. These provide depth but leave massive gaps. Between testing windows, new assets spin up, configurations drift, and none of it gets validated. CyCognito closes that gap by running validation continuously across the full external attack surface.

The platform uses seedless discovery to find externally reachable assets across on-prem, cloud, SaaS, and third-party environments, including ones that never made it onto an inventory. It then runs more than 90,000 security tests (including continuous DAST) across 30+ categories to validate issues such as:

- Data exposure
- Authentication bypass
- Abandoned assets
- OWASP threats
- Security control gaps (e.g., missing encryption or WAF coverage)
- And more

It does all of that on a recurring cadence, mirroring real attacker behavior, avoiding production disruption, and adapting as your environment changes.
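One way to picture "adapting as your environment changes" is change-driven re-validation: each cycle, fingerprint every discovered asset and re-test anything that is new or has drifted since the last run. This Python sketch assumes a hypothetical dict-based asset schema and hypothetical function names (`fingerprint`, `assets_to_revalidate`); it illustrates the scheduling idea only, not how CyCognito actually schedules its tests.

```python
import hashlib
import json
from typing import Dict, Set


def fingerprint(asset_config: dict) -> str:
    """Stable SHA-256 hash of an asset's observable configuration (hypothetical schema)."""
    canonical = json.dumps(asset_config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def assets_to_revalidate(previous: Dict[str, str], current: Dict[str, dict]) -> Set[str]:
    """Return assets that are new, or whose configuration drifted, since the last cycle."""
    due = set()
    for name, config in current.items():
        if previous.get(name) != fingerprint(config):
            due.add(name)
    return due


# Fingerprints recorded at the end of the previous cycle.
last_cycle = {
    "api.example.com": fingerprint({"ports": [443], "tls": "1.3"}),
    "www.example.com": fingerprint({"ports": [443]}),
}

# What discovery sees this cycle.
this_cycle = {
    "api.example.com": {"ports": [443, 8080], "tls": "1.3"},  # configuration drift
    "www.example.com": {"ports": [443]},                      # unchanged
    "staging.example.com": {"ports": [80]},                   # new, never inventoried
}

print(sorted(assets_to_revalidate(last_cycle, this_cycle)))
```

In practice, unchanged assets also need periodic re-testing, because new exploits appear even when nothing on the asset itself changes; a fuller scheduler would combine a change trigger with a time-based one.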

When something is confirmed exploitable, CyCognito provides the technical receipts: detailed evidence of the exploit path, the affected asset, its business context and organizational ownership, and clear remediation steps.
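Those receipts map naturally onto a structured record. The Python sketch below shows one possible shape; the `ValidatedFinding` class, its field names, and the JSON ticket format are hypothetical illustrations, not CyCognito's actual export schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class ValidatedFinding:
    asset: str                # the affected asset
    exploit_path: List[str]   # ordered steps an attacker would take
    business_context: str     # what the asset does for the business
    owner: str                # team accountable for the fix
    remediation: List[str]    # concrete steps to close the exposure

    def to_ticket(self) -> str:
        """Serialize the evidence as JSON for a ticketing system (hypothetical format)."""
        return json.dumps(asdict(self), indent=2)


finding = ValidatedFinding(
    asset="billing.example.com",
    exploit_path=["exposed admin panel", "default credentials accepted", "invoice export"],
    business_context="customer billing portal",
    owner="payments-platform team",
    remediation=["disable default admin account", "restrict /admin to VPN"],
)
print(finding.to_ticket())
```

Carrying the owner and remediation steps alongside the exploit path is what lets a finding go straight to the right team without another round of triage.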

The Math of Turning Risk into Business Value

While many organizations are adopting the Continuous Threat Exposure Management (CTEM) framework, the actual value of that framework depends on the speed and accuracy of the underlying technology. CyCognito transforms these theoretical processes into measurable business outcomes by automating the discovery, attribution, and testing of the external attack surface.

By bringing a true attacker’s perspective to the environment, CyCognito provides validation that proves exactly how an exposure could be exploited. This shift from manual triage to automated, evidence-based validation significantly reduces the operational "tax" on both security and development teams.

Reclaiming the Analyst’s Day

Because the attack surface is constantly shifting, the burden of analyzing new assets as they appear is significant. Manually assessing a single new asset (identifying its purpose, ownership, and risk) typically takes an analyst 5 to 10 hours. Validation changes this math by automating the discovery and testing process, reducing that time to just 5 to 30 minutes per asset.

To illustrate this, consider a scenario in which 20 new assets are discovered, each carrying roughly 20 potential security issues. Using the upper end of each time range, the assessment effort looks like this:

- Manual assessment: 20 assets × 10 hours = 200 hours.

- After validation: 20 assets × 30 minutes = 10 hours.

- End result: You have reclaimed 190 hours of high-level labor for every 20 assets identified, allowing analysts to focus on strategy rather than triage.
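The arithmetic is easy to check, and it is worth seeing how sensitive it is to where an organization falls within each quoted range. This Python sketch uses only the figures above; the constant and function names are illustrative.

```python
ASSETS = 20

# Per-asset effort ranges from the text, converted to hours.
MANUAL_RANGE = (5.0, 10.0)           # 5 to 10 hours
VALIDATED_RANGE = (5 / 60, 30 / 60)  # 5 to 30 minutes


def hours_reclaimed(assets: int, manual_h: float, validated_h: float) -> float:
    """Analyst hours saved when validation replaces manual assessment."""
    return assets * (manual_h - validated_h)


# The worked example uses the upper end of both ranges.
example = hours_reclaimed(ASSETS, MANUAL_RANGE[1], VALIDATED_RANGE[1])

# Bracket the savings with the least and most favorable combinations.
low = hours_reclaimed(ASSETS, MANUAL_RANGE[0], VALIDATED_RANGE[1])
high = hours_reclaimed(ASSETS, MANUAL_RANGE[1], VALIDATED_RANGE[0])

print(f"example: {example:.0f} h, range: {low:.0f}-{high:.0f} h")
```

Even at the least favorable end of both ranges, the reclaimed time for 20 assets stays well above what a single analyst works in a typical two-week sprint.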

Focusing R&D on Proven Risk

Once assets are assessed, the next challenge is the noise of the findings. Traditional scanners often flag 1% of all findings as "Critical" based on theoretical severity. Validation filters this noise by flagging only proven, exploitable issues, typically reducing critical findings to just 0.1% of the total.

Using our 20-asset scenario (20 issues per asset, or 400 findings in total), here is how validated findings make a difference:

- Initial prioritization: 400 total findings × 1% Critical = 4 "Critical" tickets.

- After validation: 400 total findings × 0.1% Critical = 0.4 (effectively 0 or 1) tickets.

- End result: A 90% reduction in noise, allowing developers to spend their precious time building features rather than chasing vulnerabilities that pose no real-world threat.
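Here is the same calculation as a short Python sketch, using only the rates quoted above; the constant names are illustrative.

```python
TOTAL_FINDINGS = 20 * 20          # 20 assets x ~20 issues each

SCANNER_CRITICAL_RATE = 0.01      # ~1% flagged "Critical" on theoretical severity
VALIDATED_CRITICAL_RATE = 0.001   # ~0.1% confirmed exploitable after validation

before = TOTAL_FINDINGS * SCANNER_CRITICAL_RATE
after = TOTAL_FINDINGS * VALIDATED_CRITICAL_RATE
reduction = 1 - after / before

print(f"before: {before:.1f}, after: {after:.1f}, noise reduction: {reduction:.0%}")
```

Note that 0.4 is an expected value rather than a literal ticket count: across many batches of 20 assets, it works out to roughly four validated criticals for every ten batches.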

Reducing Incident Response Costs

Beyond daily productivity, validation directly impacts the bottom line by preventing the most expensive days a company can face. Industry data shows that 30% of incident response engagements originate from the exploitation of public-facing applications. Closing these exposures before an attacker finds them is the most effective way to avoid unbudgeted costs.

Consider an IR team that typically handles 25 incidents per year: at 30%, roughly 7 or 8 of those would originate from exploited public-facing applications. According to recent reports, the average cost of a data breach is about $4.4M once forensics, downtime, and legal implications are factored in. By using validation to find and close these specific entry points first, the math is simple: the business can save millions by preventing the very incidents that were most likely to happen.
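The incident-cost estimate can be sketched the same way. The 25 incidents, 30% share, and $4.4M average come from the text above; the conversion rate (the fraction of those incidents that turn into a full-cost breach) is a hypothetical parameter introduced here, because not every incident reaches the cost of an average breach. The sketch therefore sweeps a few illustrative values rather than asserting one.

```python
INCIDENTS_PER_YEAR = 25
PUBLIC_APP_SHARE = 0.30        # share of IR engagements from public-facing apps
AVG_BREACH_COST = 4_400_000    # USD, average cost of a data breach


def avoidable_cost(incidents: int, share: float, conversion: float, cost: float) -> float:
    """Expected annual cost tied to incidents that start at public-facing apps.

    `conversion` is a hypothetical assumption: the fraction of those
    incidents that escalate into a breach of average cost.
    """
    return incidents * share * conversion * cost


entry_point_incidents = INCIDENTS_PER_YEAR * PUBLIC_APP_SHARE
print(f"incidents via public-facing apps: {entry_point_incidents:.1f}")

for conversion in (0.1, 0.2, 0.5):  # illustrative values only
    cost = avoidable_cost(INCIDENTS_PER_YEAR, PUBLIC_APP_SHARE, conversion, AVG_BREACH_COST)
    print(f"conversion {conversion:.0%}: ~${cost / 1e6:.1f}M per year")
```

Keeping the conversion rate explicit makes the estimate easy to re-run with an organization's own incident history instead of industry averages.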

Making It Work

The permission to ignore is not a shortcut; it is the operational logic that allows the CTEM framework to function as a unified, interconnected system. When these phases are treated as isolated silos, CTEM becomes just another way to organize an overwhelming backlog. When they are truly integrated, security becomes what it was always supposed to be: targeted, efficient, and credible.

Validation acts as the connective tissue in this cycle. It ensures that the insights from Discovery and Prioritization are hardened into high-fidelity intelligence before they ever reach the Mobilization phase. This prevents the "remediation bottleneck" by ensuring that only confirmed, reachable risks trigger a call to action.

CyCognito makes this interconnected cycle practical. Instead of validating a small sample once a year, security teams benefit from a continuous loop of proof across their full external attack surface. This ensures that every stage of the framework is fueled by real-world evidence, allowing the organization to move with confidence from discovery to resolution.

Want to see what this looks like in your environment? Schedule time with a CyCognito expert to walk through your external attack surface and discuss how CTEM can work for your organization.