What Is a Threat Hunting Framework?

A threat hunting framework is a structured approach that organizations use to proactively search for and identify malicious cyber activity that may have bypassed existing security controls. It provides a repeatable process for security teams to investigate potential threats, analyze evidence, and respond to security incidents, ultimately improving an organization’s overall security posture.

Benefits of using a threat hunting framework include:

- Improved threat detection: Frameworks help security teams identify threats that might otherwise go undetected by traditional security tools.

- Enhanced security posture: Threat hunting helps organizations identify vulnerabilities and weaknesses in their security defenses, allowing them to strengthen their overall security posture.

- Increased threat intelligence: A hypothesis-driven hunting process can generate valuable threat intelligence that can be used to improve security measures and incident response plans.

Popular threat hunting frameworks:

- MITRE ATT&CK Framework: While not a dedicated threat hunting framework, ATT&CK provides a knowledge base of adversary tactics and techniques that can be used to inform and guide threat hunting efforts.

- Open Threat Hunting Framework (OTHF): An open-source project focused on standardizing threat hunting activities through reusable detection logic.

- Targeted Hunting Integrating Threat Intelligence (TaHiTI): Developed by Dutch banks, TaHiTI combines threat intelligence with hunting methodologies.

- PEAK Threat Hunting Framework: A more modern framework from Splunk, designed to be practical, vendor-agnostic, and customizable.

Key stages in a threat hunting framework:

- Define a clear hypothesis: Base your hunt on specific goals informed by threat intelligence, known vulnerabilities, or recent anomalies.

- Collect and prepare data: Pull relevant information from logs, network traffic, endpoints, and other sources, then normalize it for analysis.

- Analyze for indicators: Examine the data to uncover potential IOCs, behavioral patterns, or anomalies suggestive of malicious activity.

- Investigate findings: Validate suspicious activity through deeper inspection to determine whether it represents a true threat or false positive.

- Take action: If a threat is confirmed, respond swiftly with containment, eradication, and recovery steps to minimize impact.

- Capture lessons learned: Document your findings and use them to refine hunting processes, improve defenses, and guide future hunts.
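To make the “Collect and prepare data” stage concrete, here is a minimal Python sketch of normalization. Records from two hypothetical sources (`source_a` and `source_b`, with made-up field names) are mapped onto one small, illustrative `Event` schema. Timestamps are converted to UTC, and host and path names are case-folded so the same machine or binary joins across sources. Real deployments typically target a much richer schema.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Minimal common schema for hunting queries (illustrative, not a standard).
@dataclass(frozen=True)
class Event:
    timestamp: datetime
    host: str
    user: str
    process: str
    source: str

# Hypothetical per-source mappings: raw field name -> schema field name.
FIELD_MAPS = {
    "source_a": {"ts": "timestamp", "hostname": "host", "username": "user", "image": "process"},
    "source_b": {"UtcTime": "timestamp", "Computer": "host", "User": "user", "Image": "process"},
}

def parse_time(value) -> datetime:
    """Accept epoch seconds or ISO 8601 strings; return a timezone-aware UTC datetime."""
    if isinstance(value, (int, float)):
        return datetime.fromtimestamp(value, tz=timezone.utc)
    parsed = datetime.fromisoformat(value)
    # Assumption: naive timestamps are already UTC (verify this per source).
    if parsed.tzinfo is None:
        return parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)

def normalize(record: dict, source: str) -> Event:
    mapping = FIELD_MAPS[source]
    fields = {schema: record[raw] for raw, schema in mapping.items()}
    fields["timestamp"] = parse_time(fields["timestamp"])
    # Case-fold hosts and paths so the same machine or binary joins across sources.
    fields["host"] = fields["host"].lower()
    fields["process"] = fields["process"].lower()
    return Event(source=source, **fields)
```

Once every source lands in the same shape, the analysis stage can query one dataset instead of writing per-source logic.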

Benefits of Using a Threat Hunting Framework

Using a structured threat hunting approach provides several key advantages for security teams:

- Improved detection of hidden threats: Frameworks enable analysts to uncover threats that bypass traditional security tools by encouraging hypothesis-driven investigation and contextual data analysis.

- Consistency across investigations: Standardized procedures reduce variability in hunts, ensuring all team members follow best practices and produce comparable results.

- Efficient use of resources: Frameworks help prioritize efforts based on threat intelligence and risk, avoiding wasted time on low-impact anomalies.

- Faster incident response: By identifying indicators early, cyber threat hunters can contain and mitigate threats before they escalate into full-blown incidents.

- Documentation and knowledge sharing: Structured approaches facilitate thorough documentation of past hunts, making it easier to share insights and improve team performance over time.

- Continuous improvement: Lessons learned from each hunt are fed back into the framework, enhancing detection capabilities and refining techniques.

- Enhanced collaboration: Defined roles and clear processes support coordination between hunters, analysts, and response teams.

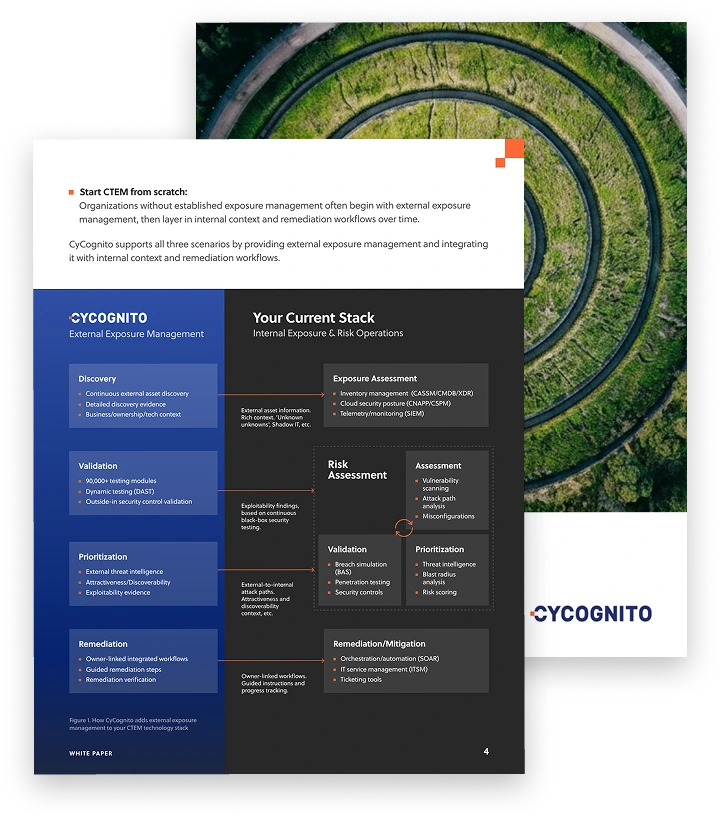

Operationalizing CTEM Through External Exposure Management

CTEM breaks when it turns into vulnerability chasing. Too many issues, weak proof, and constant escalation…

This whitepaper offers a practical starting point for operationalizing CTEM, covering what to measure, where to start, and what “good” looks like across the core steps.

Key Threat Hunting Frameworks

1. MITRE ATT&CK

MITRE ATT&CK is a globally recognized framework that categorizes adversary tactics, techniques, and procedures (TTPs) based on real-world observations. It provides security teams with a continually updated knowledge base of attacker behaviors that helps structure hunting activities. Analysts use ATT&CK to build hypotheses, map observed activity to known techniques, and align internal detection efforts with documented adversary behavior.

By leveraging ATT&CK, organizations can identify security gaps and prioritize defenses against relevant TTPs. The framework supports a threat-informed defense strategy, ensuring hunts are mapped to meaningful attacker behavior rather than theoretical threats. Its extensive public documentation and community contributions have made it an industry standard for combining detection, response, and threat intelligence.

2. Open Threat Hunting Framework (OTHF)

The Open Threat Hunting Framework (OTHF) is an open-source project focused on standardizing threat hunting activities through reusable detection logic and structured methodologies. OTHF emphasizes collaboration across the cybersecurity community, sharing detection rules, hunting playbooks, and data models that teams can adapt to their environments. This framework simplifies the process of developing and updating threat hunts.

OTHF makes structured threat hunting more efficient by offering a centralized repository for actionable knowledge and detection content. Its modular approach allows organizations to integrate detection rules with various security tools and environments. By using OTHF, teams can accelerate the adoption of advanced threat hunting practices and align their efforts with industry best practices contributed by practitioners worldwide.

3. Targeted Hunting Integrating Threat Intelligence (TaHiTI)

TaHiTI is a modular threat hunting framework that tightly integrates threat intelligence into the hunting lifecycle. It guides teams in forming hypotheses directly from relevant threat intelligence, ensuring hunts are grounded in real-world, actionable insights. This integration enables analysts to focus investigations on likely risks within their organizational context.

The modular design allows teams to adjust and expand TaHiTI according to evolving threat intelligence and organizational needs. It encourages documenting each hunt and sharing feedback across teams, which improves efficiency over time. TaHiTI stands out by tightly coupling threat intelligence feeds with the hands-on hunting process, closing the loop between external threat data and internal detection capabilities.

4. Prepare, Execute and Act with Knowledge (PEAK)

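PEAK is a threat hunting methodology developed by Splunk to provide a phased, iterative approach for security teams. Its name stands for Prepare, Execute, and Act with Knowledge: each hunt moves through three phases (Prepare, Execute, and Act), while Knowledge, such as threat intelligence, organizational context, and findings from earlier hunts, informs all of them. The Prepare phase focuses on topic selection, research, and hypothesis development and scoping; Execute covers gathering and analyzing data to test the hypothesis; and Act covers documenting the hunt, turning findings into detections, and communicating lessons learned.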

PEAK’s strength lies in its systematic cycle, which makes continuous improvement integral to the process. By structuring hunts around stages, PEAK ensures no critical steps are missed and that findings directly inform future planning. Its design supports organizations at all threat hunting maturity levels and provides clear direction.

Tips from the Expert

Dima Potekhin, CTO and Co-Founder of CyCognito, is an expert in mass-scale data analysis and security. He is an autodidact who has been coding since the age of nine and holds four patents that include processes for large content delivery networks (CDNs) and internet-scale infrastructure.

In my experience, here are tips that can help you better operationalize and maximize value from threat hunting capabilities:

- Integrate asset exposure scoring from EASM directly into hunt scoping decisions: Don’t treat all external assets equally during hunt planning. Use EASM-derived exposure risk scores (based on vulnerability, misconfiguration, and asset criticality) to dynamically prioritize which internet-facing assets and related internal systems become hunt targets.

- Introduce cross-framework TTP mapping dashboards: If you’re using multiple frameworks (e.g., PEAK + ATT&CK + OTHF), build a centralized dashboard that shows which ATT&CK TTPs each hunt covers and which remain untested. This prevents duplication across frameworks and helps identify coverage blind spots at the TTP level.

- Use hunt retrospectives to tune telemetry collection scope for future hunts: After every hunt, assess which data sources yielded the most signal vs. noise. Use this feedback to fine-tune your log collection and endpoint telemetry scope, especially for resource-heavy datasets like DNS logs or process telemetry.

- Align threat hunting frameworks with incident response kill chain mapping: During your “Respond and Remediate” phase, classify each validated threat according to both MITRE ATT&CK and the Cyber Kill Chain phase where detection failed. This gives you two lenses—tactical and strategic—for improving your hunt logic and detection engineering.

- Run periodic adversary emulation hunts on unmonitored or low-visibility data sources: Force yourself to hunt in blind spots: schedule quarterly emulation exercises where you predict adversary behavior specifically in areas where telemetry is thin (e.g., unmanaged endpoints, legacy systems, SaaS apps without direct log feeds).
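The cross-framework coverage tip can be prototyped before building a dashboard. This sketch assumes a hypothetical hunt log in which each hunt records the framework it ran under and the ATT&CK technique IDs it tested, plus a team-chosen set of in-scope techniques. It reports untested techniques, techniques tested under more than one framework, and overall coverage.

```python
from collections import defaultdict

# Hypothetical hunt log: the framework each hunt ran under and the
# ATT&CK technique IDs it tested.
HUNTS = [
    {"name": "encoded-powershell", "framework": "PEAK", "techniques": {"T1059.001"}},
    {"name": "new-scheduled-tasks", "framework": "OTHF", "techniques": {"T1053.005"}},
    {"name": "obfuscated-scripts", "framework": "ATT&CK", "techniques": {"T1059.001", "T1027"}},
]

# Techniques the team has decided matter (e.g., from threat-intel priorities).
IN_SCOPE = {"T1059.001", "T1053.005", "T1027", "T1003.001", "T1021.001"}

def coverage_report(hunts, in_scope):
    frameworks_by_technique = defaultdict(set)
    for hunt in hunts:
        for technique in hunt["techniques"]:
            frameworks_by_technique[technique].add(hunt["framework"])
    covered = in_scope & set(frameworks_by_technique)
    return {
        # In scope but never tested by any hunt: the blind spots.
        "untested": sorted(in_scope - covered),
        # Tested under more than one framework: possible duplicated effort.
        "duplicated": sorted(t for t, fws in frameworks_by_technique.items() if len(fws) > 1),
        "coverage_pct": round(100 * len(covered) / len(in_scope), 1),
    }

report = coverage_report(HUNTS, IN_SCOPE)
print(report)
```

With this sample data, credential dumping (T1003.001) and RDP lateral movement (T1021.001) surface as untested, which is exactly the kind of gap the dashboard is meant to expose.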

Essential Threat Hunting Techniques

Successful threat hunting relies on a variety of techniques to discover new threats that may not trigger standard security alerts. These techniques can be used independently or combined, depending on the hypothesis and available data.

Hypothesis-Driven Hunting

Hypothesis-driven hunting begins with a specific assumption about how a potential threat might be operating in the environment. These hypotheses are typically informed by threat intelligence reports, attacker TTPs, or recent attack trends. For example, a hunter might posit: “An attacker is using PowerShell to bypass detection on critical servers.” The hunt then focuses on collecting and analyzing relevant telemetry—such as command-line execution logs or PowerShell script block logs—to test this assumption.

This method provides structure and purpose to threat hunts, reducing the likelihood of wasted effort. It requires a solid understanding of both attacker behavior and the organization’s infrastructure. Successful hunts often refine or evolve the original hypothesis, leading to a cycle of continuous learning and improved detection capabilities.
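As an illustration, the PowerShell hypothesis above could be tested against process-creation events with a filter like the one below. The server names, event field names (`host`, `process`, `cmdline`), and the list of command-line traits are assumptions for this sketch. A real hunt would tune them to the environment and would also review script block logs, which this filter does not touch.

```python
import re

# Hypothetical scope for this hunt: the servers the hypothesis is about.
CRITICAL_SERVERS = {"dc01", "sql01", "fs01"}

# Illustrative command-line traits of evasive PowerShell use; not exhaustive.
SUSPICIOUS_TRAITS = {
    # -e, -ec, -en, -enc, ... -EncodedCommand (PowerShell accepts abbreviations)
    "encoded_command": re.compile(r"\s-e(c|n(c\w*)?)?\s", re.IGNORECASE),
    "hidden_window": re.compile(r"\s-w\w*\s+hidden", re.IGNORECASE),
    "policy_bypass": re.compile(r"\s-(ep|executionpolicy)\s+bypass", re.IGNORECASE),
    "download_cradle": re.compile(r"downloadstring|net\.webclient", re.IGNORECASE),
    "invoke_expression": re.compile(r"\biex\b|invoke-expression", re.IGNORECASE),
}

def hunt_powershell_evasion(events):
    """Return (host, cmdline, traits) for PowerShell launches on critical servers
    whose command lines show at least one evasive trait."""
    hits = []
    for ev in events:
        if ev["host"].lower() not in CRITICAL_SERVERS:
            continue
        image = ev["process"].lower()
        if not image.endswith(("powershell.exe", "pwsh.exe")):
            continue
        traits = [name for name, rx in SUSPICIOUS_TRAITS.items() if rx.search(ev["cmdline"])]
        if traits:
            hits.append((ev["host"], ev["cmdline"], traits))
    return hits
```

Hits do not confirm the hypothesis on their own; they are leads for the investigation stage, and a hunt that finds nothing is still a useful result to document.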

Anomaly Detection

Anomaly detection involves identifying deviations from established norms within the organization’s network, user behavior, or system performance. For example, an employee logging in from a new geographic location at an unusual time, or a server generating significantly more DNS queries than usual, could be flagged for further investigation.

Effective anomaly detection relies heavily on high-quality time-series data and well-defined baselines of normal activity. It may involve statistical modeling or machine learning to highlight anomalies automatically. While powerful, this technique can generate high false-positive rates if not carefully tuned, especially in dynamic environments where baselines shift over time.
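A simple statistical baseline is often the first step. The sketch below scores each host's daily DNS query count against that host's own history using a z-score. The input shape (`history` and `today` dictionaries), the 3.0 threshold, and the seven-day minimum are assumptions, not recommended values.

```python
from statistics import mean, stdev

def dns_volume_anomalies(history, today, threshold=3.0, min_days=7):
    """Compare each host's DNS query count today with its own baseline.

    history: host -> list of past daily query counts
    today:   host -> today's query count
    Returns host -> z-score for hosts at or beyond the threshold (spikes or drops).
    """
    flagged = {}
    for host, count in today.items():
        past = history.get(host, [])
        if len(past) < min_days:
            continue  # too little history for a baseline; review new hosts separately
        mu = mean(past)
        # Floor the spread at 1 query to avoid dividing by zero for perfectly constant hosts.
        sigma = max(stdev(past), 1.0)
        z = (count - mu) / sigma
        if abs(z) >= threshold:
            flagged[host] = round(z, 1)
    return flagged
```

Per-host baselines matter here: a count that is normal for a busy web proxy could be a strong signal on a database server.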

Behavioral Analytics

Behavioral analytics tracks how users and systems behave over time, identifying meaningful patterns that may indicate malicious activity. Instead of focusing on single events, it looks at the sequence and context of actions. For example, if a user typically accesses three systems per day but suddenly accesses twenty, that deviation may suggest credential misuse or lateral movement.

This technique excels at detecting insider threats, account compromise, and stealthy attackers using legitimate credentials. It often leverages machine learning models that build user or system profiles. UEBA (user and entity behavior analytics) tools can automate parts of this process, but expert interpretation remains essential for validation.
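The “three systems per day suddenly becomes twenty” example can be expressed as a per-user profile. This sketch assumes logon records shaped as hypothetical `(user, host, day)` tuples. It builds each user's typical daily host count (the median) and set of known hosts, then flags users whose spread today is several times their norm and includes hosts they have never touched. The multiplier is illustrative.

```python
from collections import defaultdict
from statistics import median

def build_profiles(history):
    """history: iterable of (user, host, day) logon records from a baseline period."""
    hosts_by_day = defaultdict(lambda: defaultdict(set))  # user -> day -> hosts
    for user, host, day in history:
        hosts_by_day[user][day].add(host)
    profiles = {}
    for user, days in hosts_by_day.items():
        profiles[user] = {
            "typical": median(len(hosts) for hosts in days.values()),
            "known_hosts": set().union(*days.values()),
        }
    return profiles

def score_today(profiles, today, multiplier=3):
    """today: iterable of (user, host) logons. Returns users whose spread looks anomalous."""
    hosts_today = defaultdict(set)
    for user, host in today:
        hosts_today[user].add(host)
    alerts = {}
    for user, hosts in hosts_today.items():
        profile = profiles.get(user)
        if profile is None:
            continue  # no baseline yet: handle brand-new accounts separately
        new_hosts = hosts - profile["known_hosts"]
        # Require both a large jump in spread and first-time access to reduce noise.
        if len(hosts) >= multiplier * profile["typical"] and new_hosts:
            alerts[user] = {
                "hosts_today": len(hosts),
                "typical": profile["typical"],
                "new_hosts": sorted(new_hosts),
            }
    return alerts
```

Combining two signals (volume and novelty) is one simple way to reflect the “sequence and context” idea; production UEBA models weigh many more features.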

IoC (Indicator of Compromise) Searches

IoC-based hunting uses known threat artifacts such as malicious IPs, domains, URLs, file hashes, or registry keys to search through organizational data. These indicators are typically sourced from threat intelligence feeds, internal detections, or incident response cases.

While IoCs are most effective against known threats, their value diminishes as attackers rotate infrastructure or change payloads. IoC-based hunting is often used to quickly scope the impact of an ongoing campaign or to validate that previous infections are no longer active. Integrating IoC searches into SIEMs and EDR platforms can help automate and scale this process.
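A basic IoC sweep is mostly set membership once indicators are normalized. The sketch below assumes a hypothetical feed of `(type, value)` pairs and events with optional `dst_ip`, `domain`, and `sha256` fields. It canonicalizes IP addresses with Python's standard `ipaddress` module and also matches subdomains of a listed domain.

```python
import ipaddress

def load_iocs(feed):
    """Split a mixed feed of (type, value) pairs into typed, normalized sets."""
    iocs = {"ip": set(), "domain": set(), "sha256": set()}
    for ioc_type, value in feed:
        value = value.strip().lower()
        if ioc_type == "ip":
            value = str(ipaddress.ip_address(value))  # canonical textual form
        iocs[ioc_type].add(value)
    return iocs

def match_domain(domain, bad_domains):
    """True if the domain or any parent domain is listed (evil.example matches a.evil.example)."""
    labels = domain.lower().rstrip(".").split(".")
    return any(".".join(labels[i:]) in bad_domains for i in range(len(labels)))

def search(events, iocs):
    hits = []
    for ev in events:
        if ev.get("dst_ip") and str(ipaddress.ip_address(ev["dst_ip"])) in iocs["ip"]:
            hits.append((ev["host"], "ip", ev["dst_ip"]))
        if ev.get("domain") and match_domain(ev["domain"], iocs["domain"]):
            hits.append((ev["host"], "domain", ev["domain"]))
        if ev.get("sha256") and ev["sha256"].lower() in iocs["sha256"]:
            hits.append((ev["host"], "sha256", ev["sha256"]))
    return hits
```

Normalization (case, trailing dots, IP notation) is where many IoC sweeps silently miss matches, so it is worth doing explicitly.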

Threat Intelligence-Driven Hunting

This approach integrates threat intelligence directly into the hunting process, using reports, actor profiles, and observed TTPs to shape hypotheses and guide data collection. For instance, if threat intel indicates an APT group targeting a specific industry with custom malware, the hunt might focus on detecting unique behaviors associated with that malware.

Threat intelligence–driven hunting ensures relevance by aligning investigation efforts with likely and high-impact threats. It can be particularly effective when intelligence is contextualized to the organization’s assets, geographic footprint, and technology stack. Integration with tools like threat intel platforms (TIPs) allows for dynamic updating of hunt logic as new intelligence becomes available.

Frequency Analysis

Frequency analysis looks for unusual event volumes, or for the absence of events that should be present. Analysts compare how often specific actions occur (such as process executions, domain resolutions, or file accesses) against typical behavior. A sudden spike in PowerShell use, or rare access to a critical file server, may warrant deeper investigation.

This method can reveal stealthy or low-volume attacks that evade signature-based detection. It’s especially useful in identifying rare or anomalous values in large datasets. Frequency analysis often works best when combined with visualizations and statistical baselines to quickly surface outliers.
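One common form of frequency analysis is “stacking”: counting on how many hosts each value appears and reviewing the long tail. The sketch below also checks the inverse case, hosts missing an event that should appear everywhere. The field names and the sensor process name `edr_sensor.exe` are hypothetical.

```python
from collections import defaultdict

def stack_by_host_count(events, key="process", max_hosts=2):
    """Least-frequency-of-occurrence stacking: return values seen on at most
    `max_hosts` distinct hosts, rarest first."""
    hosts_per_value = defaultdict(set)
    for ev in events:
        hosts_per_value[ev[key].lower()].add(ev["host"])
    rare = [(value, len(hosts)) for value, hosts in hosts_per_value.items() if len(hosts) <= max_hosts]
    return sorted(rare, key=lambda item: (item[1], item[0]))

def missing_expected(events, all_hosts, expected_value, key="process"):
    """Hosts that never reported an event expected everywhere (e.g., a security agent)."""
    seen = {ev["host"] for ev in events if ev[key].lower() == expected_value.lower()}
    return sorted(set(all_hosts) - seen)
```

Counting distinct hosts rather than raw events keeps one noisy machine from hiding a binary that appears only once across the fleet.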

Endpoint Telemetry Analysis

Endpoint telemetry analysis dives into data collected from endpoints, including process execution, file changes, registry modifications, network connections, and user activity. This detailed telemetry allows hunters to reconstruct events at the host level, identify persistence mechanisms, or detect malware behaviors like DLL injection or credential dumping.

Analyzing endpoint data provides rich context but also requires filtering out normal system noise. It’s especially powerful when EDR solutions are deployed broadly and integrated with SIEMs for correlation. This technique is critical in detecting advanced persistent threats (APTs) and uncovering lateral movement paths.
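Reconstructing process lineage is a typical first step with endpoint telemetry. This sketch assumes process-creation events with hypothetical `pid`, `ppid`, and `image` fields. It links children to parents and flags a small, illustrative set of parent/child pairs (such as Word launching PowerShell) together with the full ancestry chain.

```python
from dataclasses import dataclass, field

@dataclass
class Proc:
    pid: int
    ppid: int
    image: str
    cmdline: str = ""
    children: list = field(default_factory=list)

# Illustrative parent -> child pairs often treated as suspicious, such as a
# document macro launching a shell. Tune for your environment.
SUSPICIOUS_PAIRS = {
    ("winword.exe", "powershell.exe"),
    ("winword.exe", "cmd.exe"),
    ("excel.exe", "powershell.exe"),
    ("outlook.exe", "powershell.exe"),
}

def basename(path: str) -> str:
    return path.lower().rsplit("\\", 1)[-1]

def build_tree(events):
    """Index process-creation events by PID and link children to parents.
    Caveat: PIDs get reused, so real telemetry should key on a unique process ID."""
    procs = {e["pid"]: Proc(e["pid"], e["ppid"], e["image"].lower(), e.get("cmdline", "")) for e in events}
    roots = []
    for p in procs.values():
        parent = procs.get(p.ppid)
        if parent is not None:
            parent.children.append(p)
        else:
            roots.append(p)  # parent not present in the collected window
    return procs, roots

def lineage(procs, pid):
    """Images from the given process up to its oldest known ancestor."""
    chain, seen = [], set()
    while pid in procs and pid not in seen:
        seen.add(pid)
        chain.append(procs[pid].image)
        pid = procs[pid].ppid
    return chain

def suspicious_spawns(procs):
    hits = []
    for p in procs.values():
        parent = procs.get(p.ppid)
        if parent is not None and (basename(parent.image), basename(p.image)) in SUSPICIOUS_PAIRS:
            hits.append(lineage(procs, p.pid))
    return hits
```

Returning the whole ancestry, not just the flagged pair, gives the analyst the host-level context the paragraph above describes.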

Network Traffic Analysis

This technique analyzes network flow data or full packet captures to detect suspicious communication patterns. Hunters look for command-and-control (C2) activity, exfiltration attempts, and lateral movement by analyzing protocol usage, data transfer volumes, and timing patterns. Indicators might include beaconing behavior, encrypted traffic over non-standard ports, or connections to known malicious IPs.

Network traffic analysis is vital in environments where endpoint visibility is limited, such as unmanaged devices or IoT systems. While full packet capture offers granular detail, it can be resource-intensive to store and analyze. Network metadata (e.g., NetFlow, Zeek logs) offers a scalable alternative for broader coverage.
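Beaconing can be approximated from flow metadata alone by looking at how regular the gaps between connections are. This sketch assumes flow records as `(timestamp_seconds, src, dst, dst_port)` tuples and uses the coefficient of variation (standard deviation divided by mean) of inter-arrival times. The thresholds are illustrative, and implants that add heavy jitter will evade this simple check.

```python
from collections import defaultdict
from statistics import mean, pstdev

def find_beacons(flows, min_connections=10, max_cv=0.1):
    """Flag (src, dst, port) tuples whose connection intervals are highly regular.
    A coefficient of variation near 0 means near-constant spacing."""
    times = defaultdict(list)
    for ts, src, dst, port in flows:
        times[(src, dst, port)].append(ts)
    beacons = []
    for key, stamps in times.items():
        if len(stamps) < min_connections:
            continue  # too few connections to judge regularity
        stamps.sort()
        intervals = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        avg = mean(intervals)
        if avg == 0:
            continue  # duplicate timestamps only; no usable spacing
        cv = pstdev(intervals) / avg
        if cv <= max_cv:
            beacons.append((key, round(avg, 1), round(cv, 3)))
    return beacons
```

Candidates from this check still need context, since software update agents and monitoring tools also phone home on fixed schedules.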

Memory Analysis

Memory analysis inspects the contents of RAM on a running system to uncover evidence of in-memory-only threats, such as fileless malware, reflectively injected DLLs, or rootkits. It provides visibility into what was actively running, even if traces have been erased from disk or logs. Common tools for this include Volatility and Rekall, although Rekall is no longer actively maintained.

This approach is typically used in deep-dive investigations or post-exploitation scenarios. It requires strong forensic expertise and often manual effort to interpret memory dumps. While not practical for routine hunts, it is indispensable when facing sophisticated adversaries who avoid persistent artifacts.

Threat Emulation and Simulation

Threat emulation uses tools like MITRE Caldera, Atomic Red Team, or commercial red-teaming tools to simulate known adversary behaviors in a test or production environment. These simulated actions act as benchmarks, helping hunters validate that detection logic and telemetry sources are working as intended.

This technique closes the feedback loop between theory and practice. It helps identify visibility gaps, test the completeness of detection rules, and train teams using real-world scenarios. Regular emulation exercises improve readiness and help refine hunting methodologies based on observed response effectiveness.
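Closing the loop can be as simple as lining up what was emulated with what was alerted. This sketch assumes each emulation step and each alert is tagged with an ATT&CK technique ID and a host (an assumption about how your detections are labeled). It treats a step as detected only if a matching alert fired within a hypothetical 15-minute window after execution.

```python
from datetime import datetime, timedelta

def validate_emulation(executions, alerts, window=timedelta(minutes=15)):
    """executions: list of (technique_id, host, executed_at) from an emulation run.
    alerts: list of (technique_id, host, raised_at) exported from the SIEM/EDR.
    A step counts as detected if a same-technique, same-host alert fired within
    `window` after execution."""
    results = {"detected": [], "missed": []}
    for technique, host, executed_at in executions:
        hit = any(
            a_tech == technique
            and a_host == host
            and timedelta(0) <= raised_at - executed_at <= window
            for a_tech, a_host, raised_at in alerts
        )
        results["detected" if hit else "missed"].append((technique, host))
    return results
```

Missed steps point either to a telemetry gap or to detection logic that needs work, which is the visibility-gap feedback described above.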

How to Choose Threat Hunting Frameworks

Assess Your Team’s Maturity and Capabilities

Selecting a threat hunting framework first requires understanding your team’s existing capabilities and threat hunting maturity level. More novice teams may need frameworks with prescriptive guidance and integrated resources, while more advanced teams can benefit from modular frameworks that allow extensive customization. Evaluating technical skills, available resources, and previous hunting experience is essential before making a choice.

A realistic assessment prevents overextension and ensures chosen frameworks align with your team’s ability to implement and sustain the associated processes. This stage helps identify areas that need upskilling or additional tooling before adopting more complex frameworks.

Align to Use Cases

Different frameworks excel in different use cases, such as endpoint-focused hunts, cloud infrastructure, or integrating threat intelligence. Before selecting a framework, organizations should map their most critical detection and response scenarios, including prevalent threat vectors and compliance requirements. Matching frameworks to these scenarios ensures relevance and maximizes impact.

Understanding your unique organizational risks and threat landscape guides the selection towards frameworks that address actual needs rather than theoretical capabilities. Aligning use cases allows teams to focus resources on approaches that deliver measurable improvements in detection and response effectiveness.

Evaluate Core Framework Components

A structured threat hunting framework should define all core components—hypothesis development, data collection, analysis, investigation, response, and feedback. Evaluate each framework’s thoroughness in covering these stages and its support for integration and automation. Effective frameworks also provide robust documentation and support continuous improvement.

Assess whether the framework offers guidance on tool integration, documentation standards, and team communication. Frameworks with modular, extensible components make it easier to adapt to organizational growth and evolving security requirements.

Fit Framework to Data and Tooling

Framework selection should account for your existing security tooling and data infrastructure. Some frameworks require advanced SIEM implementations or access to rich telemetry, while others function with more basic log sources. Compatibility ensures seamless integration, enabling teams to get started quickly without overhauling technology stacks.

Consider frameworks that allow scaling and customization with your evolving infrastructure. Support for your chosen tools and data architectures minimizes friction and helps get more value from existing security investments.

Threat Hunting Frameworks and EASM

External Attack Surface Management (EASM) plays a crucial role in modern threat hunting by expanding visibility into all internet-facing assets—both known and unknown. This includes misconfigured cloud services, forgotten subdomains, and shadow IT systems that are often overlooked in traditional asset inventories. EASM helps threat hunters uncover these exposures, which may serve as entry points for attackers.

By mapping the full external footprint, EASM allows hunters to focus investigations on the most exposed and high-risk areas. This intelligence feeds directly into hypothesis development, enabling more accurate, targeted hunts. Additionally, ongoing asset discovery supports proactive detection of unauthorized changes, such as the appearance of unapproved services or unexpected software versions.

EASM-derived insights also help validate indicators of compromise (IOCs) by correlating malicious activity with exposed assets. When used in conjunction with threat intelligence, EASM enhances contextual understanding and prioritization. Ultimately, EASM empowers security teams to shift from reactive monitoring to proactive, exposure-aware hunting that anticipates attacker behavior.
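The prioritization described above can be sketched as a scoring function over an EASM inventory. The `ExposedAsset` fields, the weights, and the 1.5x multiplier for assets missing from the internal inventory are all hypothetical. The ranking puts assets already seen in IoC-matched traffic first, then orders the rest by exposure score.

```python
from dataclasses import dataclass

@dataclass
class ExposedAsset:
    hostname: str
    ip: str
    open_services: int
    critical_vulns: int
    misconfigurations: int
    business_criticality: int  # hypothetical scale: 1 (low) to 5 (crown jewel)
    in_inventory: bool         # False = shadow IT, unknown to internal asset lists

# Hypothetical weights; calibrate against your own incident history.
WEIGHTS = {"open_services": 1.0, "critical_vulns": 5.0, "misconfigurations": 2.0}

def exposure_score(a: ExposedAsset) -> float:
    base = sum(weight * getattr(a, attr) for attr, weight in WEIGHTS.items())
    score = base * a.business_criticality
    if not a.in_inventory:
        score *= 1.5  # unmanaged assets are less likely to be monitored
    return score

def hunt_targets(assets, suspicious_ips, top_n=5):
    """Rank exposed assets for hunting. `suspicious_ips` holds IPs of exposed assets
    that appeared in IoC-matched traffic; those sort first, then by exposure score."""
    ranked = sorted(
        assets,
        key=lambda a: (a.ip in suspicious_ips, exposure_score(a)),
        reverse=True,
    )
    return [a.hostname for a in ranked[:top_n]]
```

In this sample, a forgotten test host can outrank a well-managed VPN gateway, which reflects the point that unknown assets often carry disproportionate risk.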

Automating Threat Hunting with CyCognito

CyCognito empowers threat hunting teams with unique external intelligence and context during the hypothesis and scoping phases. As an Attack Surface Management (ASM) platform, it automates the discovery, risk prioritization, and validation of internet-exposed assets—including shadow IT, cloud infrastructure, APIs, and third-party environments—without requiring internal access.

By continuously mapping the organization’s external attack surface, CyCognito enables threat hunters to:

- Prioritize based on real-world exposure: CyCognito applies attacker logic and business context to highlight the most vulnerable and likely-to-be-targeted assets.

- Refine hunt hypotheses: Exposure trends, asset risk scores, and vulnerability context help teams formulate focused, high-impact hypotheses.

- Reveal coverage gaps: Autonomous reconnaissance identifies unmanaged or unmonitored assets beyond the reach of traditional EDR/SIEM tools—ideal for targeting blind spots.

- Accelerate investigations: When anomalies are detected, CyCognito provides rich context on asset ownership, exposure history, and risk posture to support rapid triage and response.

When integrated with frameworks like MITRE ATT&CK, CyCognito extends the preparation and scoping stages of a hunt beyond internal perimeters, serving as a powerful catalyst for proactive, exposure-driven threat hunting.