What Is Website Penetration Testing?

Website penetration testing is a security practice aimed at identifying and addressing vulnerabilities in web applications. By simulating cyberattacks, it evaluates the security posture of a website and reveals weaknesses that malicious actors could exploit, so they can be fixed before a real attacker finds them. Regular penetration testing helps keep web applications protected against evolving threats and supports regulatory compliance.

The process typically involves multiple stages, starting from reconnaissance and moving through active scanning, exploitation, and reporting. It requires specialized knowledge and tools to accurately simulate real-world attack scenarios. Engaging in penetration testing allows organizations to manage risks and fortify their defenses.

Benefits of Frequent Website Penetration Testing

Regular website penetration testing is essential for maintaining a strong security posture in today’s threat landscape.

- Identify vulnerabilities before exploitation: Regular penetration testing helps detect and fix vulnerabilities before they are exploited by attackers. By simulating real-world attacks, organizations can identify potential weak points in their web applications, reducing the risk of unauthorized access or data breaches.

- Ensure regulatory compliance: Many industries must comply with security standards and regulations such as PCI DSS, GDPR, and HIPAA. PCI DSS, for example, explicitly requires periodic penetration testing. Regular testing helps organizations meet these requirements, demonstrates a proactive approach to security, and helps avoid fines or penalties.

- Enhance security awareness and preparedness: Conducting regular penetration tests fosters a culture of security within the organization. It provides insights into current security weaknesses and raises awareness among developers, IT teams, and stakeholders.

- Cost-effective security management: Addressing vulnerabilities proactively through regular testing is often less costly than recovering from a cyberattack. By identifying and fixing issues early, organizations can avoid the significant financial losses associated with data breaches, including legal fees, recovery costs, and lost business.

- Optimize security investments: Penetration testing provides a clearer picture of security gaps, allowing organizations to prioritize resources on the most critical vulnerabilities. This targeted approach helps optimize security investments, ensuring that limited resources are allocated to areas with the highest impact on risk reduction.

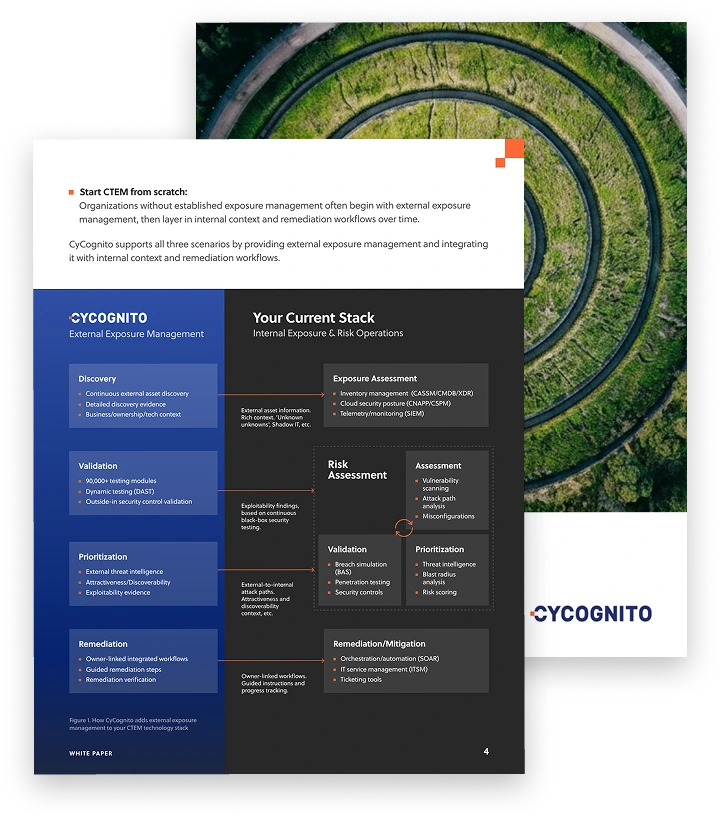

Operationalizing CTEM Through External Exposure Management

CTEM breaks when it turns into vulnerability chasing. Too many issues, weak proof, and constant escalation…

This whitepaper offers a practical starting point for operationalizing CTEM, covering what to measure, where to start, and what “good” looks like across the core steps.

Common Vulnerabilities Tested in Websites

Here are some of the most common vulnerabilities discovered in penetration tests of web applications.

SQL Injection

SQL injection is a prevalent attack vector that exploits the way an application builds and executes database queries. Attackers insert malicious SQL code into input fields and manipulate the queries sent to the backend database. This can lead to unauthorized data access, modification, or even deletion. SQL injection vulnerabilities primarily arise when user input is concatenated directly into SQL statements instead of being passed as data. The primary defense is to use prepared statements (parameterized queries). Validating inputs and applying the principle of least privilege to database accounts further reduce both the risk and the damage a successful injection can do.
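The sketch below contrasts a concatenated query with a prepared statement. It uses Python's built-in sqlite3 module and a throwaway in-memory database. The `users` table and the helper names are hypothetical and are only here for illustration.

```python
import sqlite3

def make_db() -> sqlite3.Connection:
    # Throwaway in-memory database with a hypothetical users table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "user")])
    return conn

def find_user_unsafe(conn, name):
    # VULNERABLE: input is concatenated into the SQL text, so quotes
    # inside `name` change the structure of the query.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized query: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?",
                        (name,)).fetchall()

conn = make_db()
payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # every row: the WHERE clause is always true
print(find_user_safe(conn, payload))    # [] (no user is literally named that)
```

The same payload returns the whole table through the unsafe function and nothing through the safe one. Only the query construction differs between the two.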

Cross-Site Scripting (XSS)

Cross-site scripting (XSS) is a security vulnerability that allows attackers to inject malicious scripts into web pages viewed by other users. This can lead to data theft, session hijacking, and the distribution of malware. XSS exists in three main types: stored, reflected, and DOM-based. Each occurs when an application includes untrusted data in a web page without proper validation or escaping. That page may be built on the server or, for DOM-based XSS, in client-side JavaScript. Effective defenses include encoding output for the context in which it appears, validating and sanitizing inputs, applying a Content Security Policy (CSP), and following secure coding practices.
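Output encoding is the core XSS defense. The sketch below shows it for the simplest case, where text is inserted into HTML element content, using the standard library's `html.escape`. The render functions are hypothetical stand-ins for a template layer.

```python
import html

def render_comment_unsafe(comment: str) -> str:
    # VULNERABLE: untrusted text is inserted into the HTML unchanged.
    return "<p>" + comment + "</p>"

def render_comment_safe(comment: str) -> str:
    # Escape &, <, >, " and ' so the browser treats the text as data, not markup.
    return "<p>" + html.escape(comment, quote=True) + "</p>"

payload = "<script>alert(1)</script>"
print(render_comment_unsafe(payload))  # <p><script>alert(1)</script></p>
print(render_comment_safe(payload))    # <p>&lt;script&gt;alert(1)&lt;/script&gt;</p>
```

This covers only the HTML-body context. Values placed in attributes, inline JavaScript, or URLs need encoding rules specific to that context, which is why most template engines auto-escape per context.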

Cross-Site Request Forgery (CSRF)

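Cross-site request forgery (CSRF) exploits a user's active session with a web application to perform unauthorized actions. Browsers attach the user's session cookies to requests automatically. An attacker can therefore trick the user into sending unwanted requests that the application accepts as legitimate, and use them to compromise user data or perform actions without the user's knowledge. This often happens when a user clicks a malicious link or visits a malicious page while logged into the target service. To prevent CSRF, applications can use anti-CSRF tokens, verify the origin of requests, and set the SameSite attribute on session cookies. They can also require re-authentication, such as a multi-factor prompt, for sensitive actions.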
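Anti-CSRF tokens are commonly implemented with the synchronizer token pattern, sketched below. The server stores a random token in the user's session, embeds it in each form, and rejects state-changing requests that do not echo it back. The plain `session` dict is a hypothetical stand-in for a real server-side session store.

```python
import hmac
import secrets

def issue_csrf_token(session: dict) -> str:
    """Create a per-session token and keep the server-side copy in `session`."""
    token = secrets.token_urlsafe(32)
    session["csrf_token"] = token
    return token  # embed in a hidden form field or a custom request header

def is_valid_csrf(session: dict, submitted) -> bool:
    """Accept a state-changing request only if it echoes the session's token."""
    expected = session.get("csrf_token")
    if not expected or not isinstance(submitted, str) or not submitted:
        return False
    # Constant-time comparison on bytes, so non-ASCII input cannot raise.
    return hmac.compare_digest(expected.encode(), submitted.encode())
```

An attacker's page can make the victim's browser send cookies, but it cannot read the token out of the legitimate page. A forged request therefore arrives without a valid token.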

Broken Authentication and Session Management

Broken authentication and session management present critical security weaknesses. This vulnerability arises from improper implementation of authentication mechanisms, allowing attackers to gain unauthorized access. Weak password policies, predictable session tokens, and insufficient session expiration contribute to this risk. Protecting against these vulnerabilities necessitates enforcing strong password requirements, implementing two-factor authentication, and ensuring secure session management.
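The session-management side can be sketched as follows. Session IDs come from a cryptographically secure generator, and the server enforces both an idle timeout and an absolute timeout. The `SessionStore` class, its in-memory dict, and the timeout values are hypothetical placeholders, not settings from any particular framework.

```python
import secrets
import time

IDLE_TIMEOUT = 15 * 60        # seconds; illustrative value only
ABSOLUTE_TIMEOUT = 8 * 3600   # seconds; illustrative value only

class SessionStore:
    """In-memory session store; a stand-in for a real backend."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._sessions = {}

    def create(self, user_id: str) -> str:
        # 32 random bytes (256 bits) from the OS CSPRNG: not guessable or sequential.
        sid = secrets.token_urlsafe(32)
        now = self._clock()
        self._sessions[sid] = {"user": user_id, "created": now, "last_seen": now}
        return sid

    def get_user(self, sid: str):
        s = self._sessions.get(sid)
        if s is None:
            return None
        now = self._clock()
        if now - s["last_seen"] > IDLE_TIMEOUT or now - s["created"] > ABSOLUTE_TIMEOUT:
            # Expired: delete server-side so the old token cannot be replayed.
            del self._sessions[sid]
            return None
        s["last_seen"] = now
        return s["user"]

    def destroy(self, sid: str) -> None:
        # Call on logout; issue a fresh ID after login to prevent session fixation.
        self._sessions.pop(sid, None)
```

The injectable `clock` parameter exists only so that expiry can be tested without waiting.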

Security Misconfigurations

Security misconfigurations are one of the most prevalent vulnerabilities in web applications. They occur when a system or application is not adequately configured, leaving unneeded functionality enabled or exposing more than necessary. Common misconfigurations include unchanged default settings and credentials, unnecessary features or services, verbose error messages, and incomplete configurations. Regularly reviewing and updating system settings, disabling unused services, and applying the principle of least privilege are essential steps to prevent these vulnerabilities.
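Configuration reviews can be partly automated. The sketch below checks a settings dictionary for a few risky values and for features that are switched on without being required. Every key name here (`debug`, `directory_listing`, `admin_password`, `enabled_features`, `required_features`) is hypothetical. Real key names depend on the framework and server in use.

```python
# Hypothetical risky settings: key -> (risky value, explanation).
RISKY_SETTINGS = {
    "debug": (True, "debug mode enabled (verbose errors, stack traces)"),
    "directory_listing": (True, "directory listing enabled"),
    "admin_password": ("admin", "default admin password unchanged"),
}

def audit_config(config: dict) -> list:
    """Return human-readable findings for a hypothetical settings dict."""
    findings = []
    for key, (risky_value, message) in RISKY_SETTINGS.items():
        if config.get(key) == risky_value:
            findings.append(f"{key}: {message}")
    # Anything enabled but not explicitly required widens the attack surface.
    required = set(config.get("required_features", []))
    for feature in config.get("enabled_features", []):
        if feature not in required:
            findings.append(f"enabled_features: '{feature}' is enabled but not required")
    return findings
```

In a penetration test, the same questions are asked from the outside. Does the site return stack traces? Are directory listings visible? Do default credentials still work?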

Insecure Direct Object References

Insecure direct object references (IDOR) occur when an application exposes references to internal objects, such as database record IDs or file names, in user-controllable parameters and does not check that the requester is allowed to access them. Attackers can then bypass authorization simply by changing the reference, for example an invoice ID in a URL, and access sensitive information. IDOR typically results from insufficient access controls and improper verification of user permissions. Enforcing access control checks on every request and using indirect object references prevents unauthorized access to sensitive data.
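The sketch below shows an IDOR and its fix side by side. The `INVOICES` data, the `NotFound` exception, and both functions are hypothetical.

```python
# Hypothetical invoice store keyed by sequential (guessable) IDs.
INVOICES = {
    1001: {"owner": "alice", "amount": 120},
    1002: {"owner": "bob", "amount": 75},
}

class NotFound(Exception):
    pass

def get_invoice_unsafe(invoice_id: int) -> dict:
    # VULNERABLE: changing ?id=1001 to ?id=1002 returns Bob's invoice to anyone.
    return INVOICES[invoice_id]

def get_invoice(current_user: str, invoice_id: int) -> dict:
    invoice = INVOICES.get(invoice_id)
    # Authorize on every request. "Missing" and "not yours" get the same
    # answer, so responses do not reveal which IDs exist.
    if invoice is None or invoice["owner"] != current_user:
        raise NotFound("invoice not found")
    return invoice
```

Testers typically probe for this by logging in as two different users and swapping object IDs between their requests.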

Sensitive Data Exposure

Sensitive data exposure is a significant concern in web security. It occurs when applications inadvertently expose sensitive information, such as credentials, personal information, or financial data. This can happen through weak encryption, insufficient access controls, or improper data storage methods. Protecting sensitive data involves encrypting data in transit and at rest, implementing strong access controls, and following secure coding standards.
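Credential storage is one narrow but common part of this problem: passwords should never be stored in plaintext or behind a fast, unsalted hash. The sketch below uses PBKDF2 from Python's standard library with a random salt per password. The storage format is a hypothetical convention. The default of 600,000 iterations is in line with OWASP's published guidance for PBKDF2-HMAC-SHA256 and should be tuned for your hardware. Dedicated algorithms such as Argon2, bcrypt, or scrypt are common alternatives.

```python
import hashlib
import hmac
import secrets

def hash_password(password: str, iterations: int = 600_000) -> str:
    salt = secrets.token_bytes(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    # Store the parameters alongside the hash so they can be raised later.
    return f"pbkdf2_sha256${iterations}${salt.hex()}${digest.hex()}"

def verify_password(password: str, stored: str) -> bool:
    algorithm, iterations, salt_hex, digest_hex = stored.split("$")
    if algorithm != "pbkdf2_sha256":
        return False
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(),
                                 bytes.fromhex(salt_hex), int(iterations))
    # Constant-time comparison of the derived keys.
    return hmac.compare_digest(digest, bytes.fromhex(digest_hex))
```

Encryption in transit (TLS) and at rest are separate controls. This sketch does not address them.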

Tips from the Expert

Dima Potekhin, CTO and Co-Founder of CyCognito, is an expert in mass-scale data analysis and security. He is an autodidact who has been coding since the age of nine and holds four patents that include processes for large content delivery networks (CDNs) and internet-scale infrastructure.

In my experience, here are tips that can help you better conduct website penetration testing:

- Leverage subdomain enumeration for hidden assets: Use tools like Amass or Sublist3r to identify subdomains that might host web applications not directly linked from the main site. These often contain forgotten or less-secured applications that could provide an easy entry point.

- Focus on client-side security headers: Beyond just checking for Content Security Policy (CSP), ensure the site has HTTP Strict Transport Security (HSTS), X-Content-Type-Options, X-Frame-Options, and Referrer-Policy headers set. These reduce attack vectors like clickjacking, MIME-type attacks, and MITM (Man-in-the-Middle) attacks.

- Test for business logic flaws beyond OWASP Top 10: Most penetration tests focus on common vulnerabilities like XSS or SQL injection. However, look for flaws in business logic, such as unauthorized discounts, manipulation of checkout processes, or bypass of two-step processes, which attackers can exploit without triggering alarms.

- Analyze HTTP request and response behavior under load: Perform testing during high-traffic periods or simulate heavy traffic using stress-testing tools like Apache JMeter. Sometimes vulnerabilities (e.g., race conditions or improper session handling) only emerge under specific load conditions.

- Review third-party integrations and dependencies critically: Test integrations like payment gateways, chat widgets, or analytics services. These often include external scripts that might have vulnerabilities or use outdated versions with known security flaws.
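The security-header tip above can be partly automated. The sketch below checks a dictionary of response headers for the five headers the tip names and flags obviously wrong values for two of them. It checks presence and basic values only. A Content-Security-Policy containing 'unsafe-inline', for example, would still pass.

```python
RECOMMENDED_HEADERS = [
    "Content-Security-Policy",
    "Strict-Transport-Security",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
]

def audit_security_headers(headers: dict) -> list:
    # HTTP header names are case-insensitive, so normalize before comparing.
    present = {name.lower(): value.strip() for name, value in headers.items()}
    issues = [f"missing {h}" for h in RECOMMENDED_HEADERS if h.lower() not in present]
    # A missing header is already reported above; here only check set values.
    if present.get("x-content-type-options", "nosniff").lower() != "nosniff":
        issues.append("X-Content-Type-Options should be 'nosniff'")
    xfo = present.get("x-frame-options")
    if xfo is not None and xfo.upper() not in ("DENY", "SAMEORIGIN"):
        issues.append("X-Frame-Options should be DENY or SAMEORIGIN")
    return issues
```

For a site you are authorized to test, you could pass in `dict(urllib.request.urlopen(url).headers)`.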

Pen Testing Approaches for Different Scenarios

Network-Based Penetration Testing

Network-based website penetration testing focuses on assessing the security of the network infrastructure supporting the website, including web servers, firewalls, and load balancers. This testing aims to identify vulnerabilities within the network that could compromise the website, such as open ports, outdated software, or misconfigured services that could be leveraged by attackers.

The process begins with reconnaissance to identify network assets associated with the website. Penetration testers then simulate attacks to test for weaknesses in network defenses, such as firewall rules that might allow unauthorized access or unsecured communication channels that could be intercepted.
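Real engagements use dedicated scanners such as Nmap for network checks. The basic idea behind finding open ports is a TCP connect check, sketched below: try to complete a connection to each port and record whether it succeeds. Run anything like this only against hosts that are explicitly in scope.

```python
import socket

def check_tcp_ports(host: str, ports, timeout: float = 1.0) -> dict:
    """TCP connect check: True if a connection to the port succeeds."""
    results = {}
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            results[port] = sock.connect_ex((host, port)) == 0
    return results
```

An unexpected open port, such as a database or admin service reachable from the internet, is exactly the kind of network weakness this approach looks for.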

Application Logic Testing

Application logic testing for websites targets vulnerabilities in how the website’s functions and workflows are designed, particularly looking for flaws in business logic that attackers could exploit. This testing evaluates scenarios such as account management processes, transaction flows, and permissions checks to uncover vulnerabilities that may not involve traditional coding flaws but could allow manipulation of the website’s intended behavior.

For example, testers might simulate actions such as bypassing multi-step processes, exploiting shopping cart logic, or manipulating discount codes. By identifying and fixing these types of issues, application logic testing ensures that the website operates as intended and prevents attackers from exploiting business logic flaws to gain unauthorized access or misuse the website’s functionality.
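Many of the checkout and discount flaws described above come from trusting values the client controls. The sketch below recomputes an order total on the server. It looks up prices itself, rejects non-positive quantities, and allows a discount code only once. The catalog, discount codes, and function names are all hypothetical.

```python
from decimal import Decimal

# Hypothetical catalog and discount codes; prices live on the server only.
CATALOG = {"sku-1": Decimal("20.00"), "sku-2": Decimal("5.50")}
DISCOUNTS = {"WELCOME10": Decimal("0.10")}

class CheckoutError(ValueError):
    pass

def checkout_total(cart: list, code, used_codes: set) -> Decimal:
    """Recompute the order total server-side, ignoring client-sent prices."""
    total = Decimal("0")
    for item in cart:
        qty = item.get("qty")
        # Reject bools, non-integers, zero and negatives. Negative quantities
        # are a classic way to push a cart total down.
        if type(qty) is not int or qty <= 0:
            raise CheckoutError("invalid quantity")
        price = CATALOG.get(item.get("sku"))
        if price is None:
            raise CheckoutError("unknown item")
        total += price * qty  # any "price" field sent in `item` is never read
    if code is not None:
        if code not in DISCOUNTS or code in used_codes:
            raise CheckoutError("discount code invalid or already used")
        total -= total * DISCOUNTS[code]
        used_codes.add(code)  # single use: a replayed code is rejected
    return total.quantize(Decimal("0.01"))
```

In a real system, checking and marking a code as used must happen atomically. Otherwise concurrent requests may redeem the same code twice, which is the kind of race condition that load testing can expose.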

API-Specific Penetration Testing

API-specific penetration testing for websites evaluates the security of web APIs that interact with the website, which are often targeted by attackers due to the data and functionality they expose. This approach assesses API endpoints for issues like broken authentication, insufficient rate limiting, and excessive data exposure that could lead to data leakage or unauthorized access.

Testing involves checking access permissions, validating input handling, and ensuring secure authentication practices for each API endpoint. For example, testers look for vulnerabilities like broken object-level authorization, where users may be able to access data they shouldn’t, or rate limiting failures that could allow brute-force attacks. By securing APIs, organizations can protect backend systems and user data accessible via the website.
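Testers commonly check whether an API throttles repeated login attempts. The sketch below shows one common way to implement that throttling, a sliding-window rate limiter keyed by client or account. The class and its parameters are hypothetical. It also keeps state in a single process's memory, whereas a multi-server deployment would need a shared store.

```python
import time
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each key."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self._hits = defaultdict(deque)

    def allow(self, key: str) -> bool:
        now = self.clock()
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the current window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # caller would typically respond with HTTP 429
        hits.append(now)
        return True
```

During a test, sending more than `limit` login attempts in a short period and never seeing a rejection is evidence that the endpoint lacks rate limiting.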

Client-Side Testing

Client-side website testing focuses on identifying vulnerabilities within the user-facing aspects of a website, particularly those that can impact users’ browsers and data. This testing approach includes looking for issues such as insecure cookies, weak content security policies (CSPs), and JavaScript vulnerabilities that could allow cross-site scripting (XSS) or other client-side attacks.

Penetration testers examine how the website manages data in the user's browser. They check whether cookies carry secure attributes such as Secure, HttpOnly, and SameSite, and how client-side code handles untrusted input. Identifying and addressing client-side vulnerabilities helps protect users from attacks that might steal session information, hijack accounts, or execute unauthorized actions on their behalf.
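Cookie attributes are easy to check mechanically. The sketch below parses a single `Set-Cookie` header value and reports missing Secure or HttpOnly flags and a SameSite value other than Lax or Strict. Flagging `SameSite=None` is a judgment call, because cookies that genuinely must be sent cross-site need it.

```python
def audit_set_cookie(header_value: str) -> list:
    """Check one Set-Cookie header value for common security attributes."""
    name, _, rest = header_value.partition("=")
    cookie = name.strip()
    attributes = {}
    # The first ';'-separated segment is the cookie value; the rest are attributes.
    for part in rest.split(";")[1:]:
        key, _, value = part.strip().partition("=")
        attributes[key.lower()] = value.strip()
    issues = []
    if "secure" not in attributes:
        issues.append(f"{cookie}: missing Secure (may be sent over plain HTTP)")
    if "httponly" not in attributes:
        issues.append(f"{cookie}: missing HttpOnly (readable by JavaScript)")
    if attributes.get("samesite", "").lower() not in ("lax", "strict"):
        issues.append(f"{cookie}: SameSite is not Lax or Strict")
    return issues
```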

Types of Penetration Testing for Web Applications

Black Box Testing

Black box testing simulates an external attack with no prior knowledge of the internal workings of the web application. Testers approach the system as attackers would, focusing on exposing vulnerabilities through trial and error. This method provides a realistic view of the potential external threats to the application. However, the lack of internal insights may also limit the depth of exploration and the identification of some complex issues.

The effectiveness of black box testing depends largely on the tester's skill and the breadth of attack vectors explored. This approach is valuable for assessing how well an application stands up to real-world attacks and for identifying common vulnerabilities, such as SQL injection and CSRF. Combining black box tests with other methodologies can provide a well-rounded security assessment.

White Box Testing

White box testing, also known as clear box or glass box testing, involves a thorough examination of the application's internal structure and source code. Testers have full access to source code, which lets them identify vulnerabilities that may not be visible in black box testing. This approach uncovers hidden bugs and logic errors that could lead to security issues. White box tests are particularly useful for understanding how input is processed and identifying potential security risks within the code.

Due to its thorough nature, white box testing can be costly and time-consuming, requiring specialized expertise to analyze the application’s intricacies. It is beneficial in maintaining high security standards by ensuring code quality and secure practices are followed. While effective in identifying code-level vulnerabilities, it’s often used in conjunction with other testing types to ensure a complete security assessment.

Gray Box Testing

Gray box testing combines elements of both black and white box testing. Testers receive partial knowledge of the application's internals, such as user credentials, architecture diagrams, or API documentation. This lets them focus on specific areas of concern while keeping an external perspective. Because less time goes into discovery, gray box testing can cover authenticated functionality and high-risk areas in more depth, which makes it a practical option for assessing both the functionality and the security of web applications.

Gray box testing is beneficial, offering a balanced focus on security and efficiency. Testers can prioritize based on known information, ensuring that critical aspects are thoroughly evaluated. This method is cost-effective and provides a realistic insight into potential security threats. It helps in identifying issues such as improper application configurations, broken access controls, and input validation errors.

Penetration Testing Methodology for Web Applications

Website penetration tests typically follow these steps:

1. Reconnaissance

Information gathering, also known as reconnaissance, is the first phase of web application penetration testing. During this phase, testers collect as much data as possible about the target web application. This includes identifying the technologies used, crawling the application structure, and mapping out potential entry points. Information can be gathered through both passive and active techniques.

Passive information gathering involves collecting publicly available data without interacting directly with the target, such as through search engines, domain records, or public databases. Active information gathering involves interacting with the target directly. Examples include scanning with Nmap to identify open ports and services, or browsing the application through Burp Suite to map endpoints, parameters, and potential vulnerabilities. The insights gained from this phase help testers plan their attacks and focus on the most vulnerable areas of the application.
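Mapping entry points largely means collecting the links, forms, and parameters a page exposes. The sketch below does this for a single HTML page using the standard library's `HTMLParser`. Fetching pages and following links (the crawling part) is left out, and the class name is hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class EntryPointMapper(HTMLParser):
    """Collect links and form fields from one HTML page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = set()
        self.forms = []  # (METHOD, absolute action URL, [field names])
        self._in_form = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # Resolve relative links against the page URL.
            self.links.add(urljoin(self.base_url, attrs["href"]))
        elif tag == "form":
            action = urljoin(self.base_url, attrs.get("action") or "")
            method = (attrs.get("method") or "get").upper()
            self.forms.append((method, action, []))
            self._in_form = True
        elif tag in ("input", "select", "textarea") and self._in_form and attrs.get("name"):
            # Named fields are the parameters a tester will probe later.
            self.forms[-1][2].append(attrs["name"])

    def handle_endtag(self, tag):
        if tag == "form":
            self._in_form = False
```

Calling `feed()` with a page's HTML fills `links` and `forms`. Each form's method, action URL, and field names become candidate inputs for the scanning and exploitation phases.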

2. Vulnerability Scanning

Once sufficient information has been collected, the next step is vulnerability scanning. During this phase, automated tools are used to scan the web application for known security weaknesses, such as outdated software, insecure configurations, and common vulnerabilities like SQL injection or XSS. Tools like OpenVAS, Nessus, or ZAP are typically employed for this task.

Vulnerability scanning helps identify low-hanging fruit and potential attack vectors that could be exploited. However, since automated scans can sometimes produce false positives or miss more complex vulnerabilities, the results are usually reviewed manually by the tester. This ensures that the findings are accurate and that the most critical vulnerabilities are prioritized for further exploitation.
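One simple check behind "outdated software" findings is comparing a version string from a server banner with a minimum version. The sketch below illustrates this. The `MINIMUM_SAFE` table is entirely hypothetical and does not contain real advisory data; actual scanners rely on vulnerability feeds. Banner checks like this also illustrate why manual review matters. Linux distributions often backport security fixes without changing the version string, so a version that looks old may already be patched.

```python
import re

# HYPOTHETICAL thresholds for illustration only, not real advisory data.
MINIMUM_SAFE = {"nginx": (1, 99, 0), "apache": (2, 99, 0)}

def parse_server_banner(banner: str):
    """Parse a banner like 'nginx/1.18.0' into ('nginx', (1, 18, 0))."""
    match = re.match(r"([A-Za-z-]+)/(\d+(?:\.\d+)*)", banner.strip())
    if not match:
        return None  # banner hidden or non-standard
    product = match.group(1).lower()
    version = tuple(int(part) for part in match.group(2).split("."))
    return product, version

def check_banner(banner: str) -> str:
    parsed = parse_server_banner(banner)
    if parsed is None:
        return "unknown: verify manually"
    product, version = parsed
    minimum = MINIMUM_SAFE.get(product)
    if minimum is None:
        return "no rule: verify manually"
    # Tuples compare element by element, which orders numeric versions correctly.
    return "possibly outdated: confirm before reporting" if version < minimum else "ok"
```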

3. Exploitation

In the exploitation phase, testers attempt to actively exploit the identified vulnerabilities to understand their real-world impact. This may involve injecting malicious code, bypassing authentication mechanisms, or escalating privileges. The goal is to determine how an attacker could use these weaknesses to compromise the system, steal data, or take control of the application.

Exploitation techniques vary based on the type of vulnerabilities discovered. For example, in the case of SQL injection, testers might attempt to extract sensitive data from the database. For broken authentication, they may try to hijack user sessions. This phase provides critical insights into the severity of the vulnerabilities and the potential damage they could cause if left unaddressed.
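Showing the real impact of SQL injection usually means retrieving data the vulnerable query was never meant to return. The sketch below demonstrates a UNION-based extraction. It runs entirely against a throwaway in-memory SQLite database, and the tables, data, and search function are invented for illustration.

```python
import sqlite3

# Local lab: a product search vulnerable to SQL injection, plus a separate
# table of (fake) credentials the search should never expose.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (name TEXT, price REAL);
    CREATE TABLE accounts (username TEXT, password_hash TEXT);
    INSERT INTO products VALUES ('widget', 9.99);
    INSERT INTO accounts VALUES ('admin', 'fake-hash-for-demo');
""")

def search_products(term: str):
    # VULNERABLE on purpose: input is concatenated into the query.
    sql = "SELECT name, price FROM products WHERE name LIKE '%" + term + "%'"
    return conn.execute(sql).fetchall()

# The UNION must return the same number of columns as the original query
# (two here). The trailing "--" comments out the rest of the original SQL.
payload = "zzz' UNION SELECT username, password_hash FROM accounts--"
print(search_products(payload))  # [('admin', 'fake-hash-for-demo')]
```

A report that includes a reproducible demonstration like this makes the severity of the finding hard to dispute.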

4. Post-Exploitation

Post-exploitation involves assessing the extent of access gained and the potential for further attacks after a vulnerability has been successfully exploited. This phase focuses on determining the persistence of the attack, lateral movement within the system, and the possible extraction of valuable data. Testers may explore whether they can create backdoors, escalate privileges further, or pivot to other systems connected to the compromised web application.

The goal of post-exploitation is to simulate the actions an attacker might take after gaining initial access. By doing so, testers can demonstrate the full impact of a breach and highlight areas where additional controls are needed to prevent further exploitation or data exfiltration.

5. Reporting and Remediation

After testing is completed, a comprehensive report is generated detailing the findings. This report includes an overview of the vulnerabilities discovered, the methods used to exploit them, the potential risks posed by these vulnerabilities, and recommendations for remediation. The report typically categorizes vulnerabilities based on their severity, allowing the organization to prioritize fixes.

Remediation involves applying patches, fixing code errors, enhancing configurations, and implementing stronger security controls. A follow-up penetration test is often conducted after remediation to verify that all vulnerabilities have been effectively addressed and that no new issues have been introduced.
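The text does not name a scoring system. One widely used way to categorize findings by severity is the CVSS v3.x qualitative rating scale: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). The sketch below applies that scale and orders findings for remediation. The `Finding` type and the scores used with it are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    title: str
    cvss: float  # CVSS v3.x base score, 0.0 to 10.0

def severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"

def prioritize(findings):
    # Highest score first, so remediation starts with the most severe issues.
    return [(severity(f.cvss), f.title)
            for f in sorted(findings, key=lambda f: f.cvss, reverse=True)]
```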

Technology Used for Website Penetration Testing

Several technologies and tools are employed to conduct thorough website penetration testing. These technologies fall into different categories based on the type of testing they support:

- Static application security testing (SAST): SAST tools analyze an application’s source code, bytecode, or binary code without executing it. This approach helps identify vulnerabilities such as insecure coding practices or improper input validation early in the development lifecycle.

- Dynamic application security testing (DAST): DAST tools simulate attacks on running applications, testing for vulnerabilities like SQL injection, cross-site scripting (XSS), and authentication flaws. Unlike SAST, DAST tools assess the application in a runtime environment.

- Software composition analysis (SCA): SCA tools focus on identifying security vulnerabilities in third-party components and libraries used within the application. These tools analyze the open-source dependencies for known vulnerabilities and suggest patches or updates.

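- Interactive application security testing (IAST): IAST tools combine elements of SAST and DAST. They instrument the application, typically with an agent, and observe its code paths in real time while it runs and is exercised by tests. This lets them report a vulnerability together with the location in the code that causes it.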

Web Application Security with CyCognito

CyCognito identifies web application security risks through scalable, continuous, and comprehensive active testing that ensures a fortified security posture for all external assets.

The CyCognito platform helps secure web applications by:

- Using payload-based active tests to provide complete visibility into any vulnerability, weakness, or risk in your attack surface.

- Going beyond traditional passive scanning methods and targeting vulnerabilities invisible to traditional port scanners.

- Employing dynamic application security testing (DAST) to effectively identify critical web application issues, including those listed in the OWASP Top 10 and web security testing guides.

- Eliminating gaps in testing coverage, uncovering risks, and reducing complexity and costs.

- Offering comprehensive visibility into any risks present in the attack surface, extending beyond the limitations of software-version based detection tools.

- Continuously testing all exposed assets and ensuring that security vulnerabilities are discovered quickly across the entire attack surface.

- Assessing complex issues like exposed web applications, default logins, vulnerable shared libraries, exposed sensitive data, and misconfigured cloud environments that can’t be evaluated by passive scanning.

CyCognito makes managing web application security simple by identifying and testing these assets automatically, continuously, and at scale using CyCognito’s enterprise-grade testing infrastructure.
