What Is the Common Vulnerability Scoring System (CVSS)?

The Common Vulnerability Scoring System (CVSS) is an open industry standard to assess the severity of computer system security vulnerabilities. It provides a quantitative model that organizations use to prioritize and manage vulnerabilities based on objective criteria. By assigning a numerical score to a vulnerability, CVSS enables consistent decision-making about remediation efforts across different environments and industries.

CVSS is maintained by the Forum of Incident Response and Security Teams (FIRST) and is widely adopted by security professionals, vulnerability databases, and software vendors. The system provides standardized base scores for vulnerabilities and allows for customization based on the specific context of an organization or technology stack, improving the relevance of risk assessments.

This is part of a series of articles about vulnerability management.

Evolution of the CVSS Standard

CVSS has gone through multiple versions since its introduction, each refining how vulnerabilities are scored to reflect real-world conditions more accurately.

CVSS v1 was released in 2005, offering a basic structure for evaluating vulnerabilities. However, its limitations in flexibility and transparency led to limited adoption.

CVSS v2, published in 2007, improved on the original by introducing clearer metric definitions and refining the metrics within its three groups: base, temporal, and environmental. This version gained broad industry support and became widely used in public vulnerability databases.

In 2015, CVSS v3.0 addressed issues in v2 by better capturing the characteristics and impact of modern vulnerabilities. It introduced new metrics such as “scope,” which reflects whether a vulnerability in one component can affect resources beyond its security authority.

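CVSS v3.1, released in 2019, focused on clarifying metric definitions and usage guidance. It added no new metrics and left the Base Score formula unchanged, although it redefined the rounding function and corrected part of the Environmental Score formula.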

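CVSS v4.0, released in November 2023, made broader structural changes. It replaced the Temporal group with a Threat group, replaced Scope with separate impact metrics for the vulnerable system and for subsequent systems, added an Attack Requirements metric, and introduced optional Supplemental metrics to accommodate use cases like IoT, safety-critical systems, and supply chain risks. The updated model aims to improve scoring precision, support automation, and enhance context-aware risk assessments.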

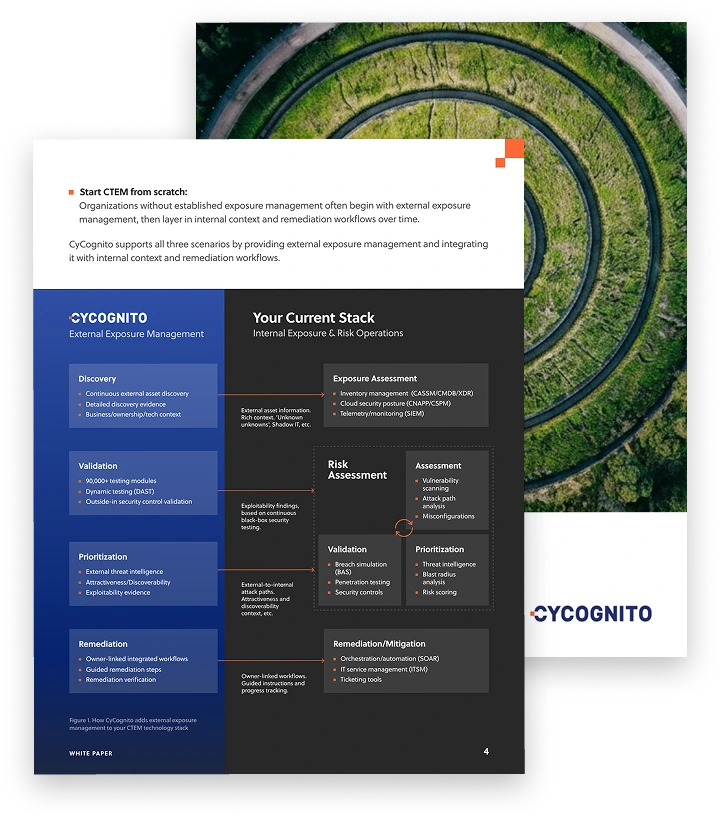


Core Components of CVSS

In CVSS v2 and v3.x, scoring evaluates vulnerabilities through a set of metrics grouped into three categories (CVSS v4.0 reorganizes these groups):

- The base score reflects intrinsic characteristics of a vulnerability that are constant over time and across user environments.

- The temporal score factors in attributes that change over time such as exploit availability.

- The environmental score allows customization based on an organization’s unique impact or asset criticality.

A vulnerability’s final CVSS score is a composite of these metrics, ranging from 0.0 (no severity) to 10.0 (critical severity). This structure enables companies to use the standard base score for general prioritization, then tailor the overall severity based on their own temporal or environmental considerations, resulting in more effective vulnerability management.
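For CVSS v3.x and v4.0, FIRST also defines a qualitative rating scale that maps the numeric score to a severity label: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). The following is a minimal sketch of that mapping. The function name `severity_rating` is hypothetical, and it assumes scores are already rounded to one decimal place, as CVSS scores are.

```python
def severity_rating(score: float) -> str:
    """Map a CVSS v3.x/v4.0 score (one decimal place) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:   # 0.1 to 3.9
        return "Low"
    if score < 7.0:   # 4.0 to 6.9
        return "Medium"
    if score < 9.0:   # 7.0 to 8.9
        return "High"
    return "Critical"  # 9.0 to 10.0


print(severity_rating(7.5))  # High
```

CVSS v2 did not define this five-level scale in its specification, so labels attached to v2 scores (for example, in public databases) may follow different bands.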

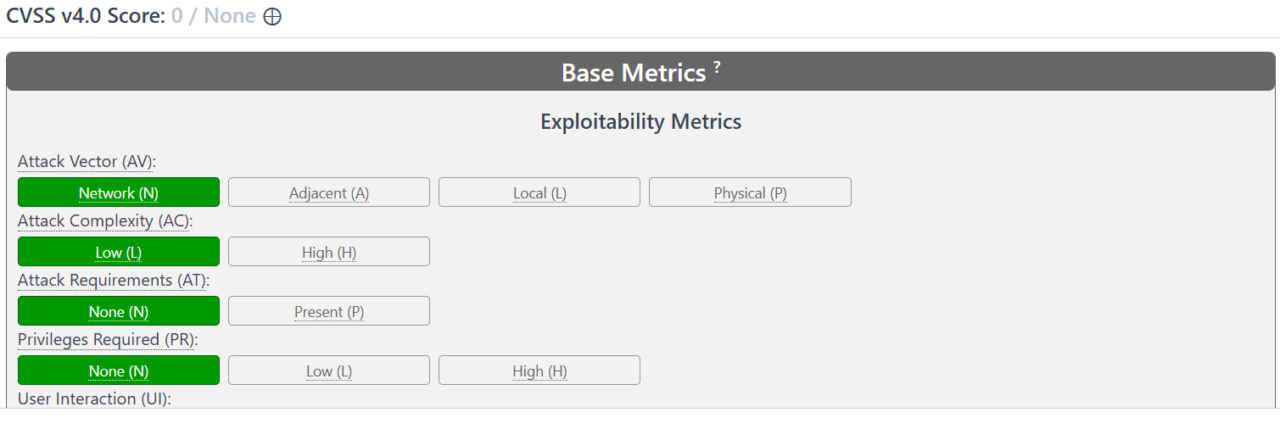

Try It Yourself with the CVSS 4.0 Calculator

The CVSS v4.0 Calculator allows users to generate scores by selecting values for each metric directly from the official scoring model. It includes sections for Base, Threat, Environmental, and Supplemental metrics, matching the structure of the CVSS 4.0 specification.

The interface groups metrics into categories:

- Exploitability Metrics, such as attack vector, attack complexity, attack requirements, privileges required, and user interaction

- Vulnerable System Impact Metrics, covering confidentiality, integrity, and availability impacts

- Subsequent System Impact Metrics, which assess potential effects on other systems if exploitation extends beyond the original component

Users can also specify Supplemental Metrics to capture additional context, like whether the vulnerability is automatable, its safety impact, recovery complexity, and the urgency from the provider’s perspective.

The calculator supports Environmental Customization through modified base metrics and security requirement weights, enabling organizations to tailor scores based on their own risk environments. Interactive elements in the interface provide metric definitions and scoring guidance to aid accuracy.

By adjusting values in real time, users can immediately see how changes affect the final score, which is presented numerically (0.0–10.0) and as a qualitative severity rating (None, Low, Medium, High, or Critical). This makes the calculator a practical tool for vulnerability analysts, security engineers, and risk assessors looking to apply CVSS scoring in operational contexts.
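The calculator records the selected values as a vector string, such as `CVSS:4.0/AV:N/AC:L/AT:N/PR:N/UI:N/VC:H/VI:H/VA:H/SC:N/SI:N/SA:N`, which is how scores are usually exchanged between tools. The following is a minimal parsing sketch. The function name `parse_cvss4_vector` is hypothetical. It validates only the 11 mandatory Base metrics and their allowed letters. Threat, Environmental, and Supplemental metrics are passed through unchecked, and metric order is not enforced. It does not compute a score, because v4.0 scoring relies on lookup tables that are not reproduced here.

```python
# Allowed values for the mandatory CVSS v4.0 Base metrics.
BASE_METRICS_V4 = {
    "AV": {"N", "A", "L", "P"},  # Attack Vector
    "AC": {"L", "H"},            # Attack Complexity
    "AT": {"N", "P"},            # Attack Requirements
    "PR": {"N", "L", "H"},       # Privileges Required
    "UI": {"N", "P", "A"},       # User Interaction
    "VC": {"H", "L", "N"},       # Vulnerable System Confidentiality
    "VI": {"H", "L", "N"},       # Vulnerable System Integrity
    "VA": {"H", "L", "N"},       # Vulnerable System Availability
    "SC": {"H", "L", "N"},       # Subsequent System Confidentiality
    "SI": {"H", "L", "N"},       # Subsequent System Integrity
    "SA": {"H", "L", "N"},       # Subsequent System Availability
}


def parse_cvss4_vector(vector: str) -> dict[str, str]:
    """Split a CVSS v4.0 vector string into {metric: value} and check Base metrics."""
    prefix, _, rest = vector.partition("/")
    if prefix != "CVSS:4.0":
        raise ValueError(f"expected a 'CVSS:4.0' prefix, got {prefix!r}")
    metrics: dict[str, str] = {}
    for part in rest.split("/"):
        key, sep, value = part.partition(":")
        if not sep or not key or not value:
            raise ValueError(f"malformed metric {part!r}")
        if key in metrics:
            raise ValueError(f"duplicate metric {key!r}")
        metrics[key] = value
    for key, allowed in BASE_METRICS_V4.items():
        if key not in metrics:
            raise ValueError(f"missing base metric {key}")
        if metrics[key] not in allowed:
            raise ValueError(f"invalid value {metrics[key]!r} for {key}")
    return metrics


m = parse_cvss4_vector("CVSS:4.0/AV:N/AC:L/AT:N/PR:N/UI:N/VC:H/VI:H/VA:H/SC:N/SI:N/SA:N")
print(m["AV"], m["VC"])  # N H
```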

How Is the CVSS Base Score Computed?

1. Attack Vector

The attack vector (AV) metric reflects how remotely an attacker must be positioned to exploit a vulnerability. It is an indicator of exploitability, distinguishing between attacks that require physical proximity (physical), local system access (local), adjacent network access (adjacent network), or remote/network-based exploitation (network). Each level corresponds to a progressively larger attack surface, with a remote network-based attack generally scoring higher due to the larger threat landscape.

Attack vector directly influences risk prioritization because remotely exploitable vulnerabilities can be targeted by a broader range of actors, including automated scanning tools or opportunistic adversaries. When scoring, assess the realistic path an attacker would take, accounting for whether they must touch a peripheral, act from within the same subnet, or exploit accessible services over the internet.

2. Attack Complexity

Attack complexity (AC) measures the conditions beyond the attacker’s control that must be met to exploit a vulnerability. It distinguishes between “low” complexity, where exploits can be reliably executed without special circumstances, and “high” complexity, where successful exploitation depends on unpredictable or rare conditions like timing, system state, or the presence of specific configurations.

A vulnerability with high attack complexity receives a lower score, signaling that exploitation is less likely or requires advanced skills or luck. Properly evaluating attack complexity requires understanding both the technical prerequisites and operational dependencies necessary to trigger a vulnerability and determining if an attacker can reasonably expect to overcome those hurdles.

3. Privileges Required

Privileges required (PR) evaluates the minimum user privileges needed for successful exploitation. The options—none, low, and high—map to unauthenticated attackers, users with limited rights, or those requiring administrative or root access, respectively. Lower privilege requirements correspond to higher CVSS scores, as vulnerabilities exploitable by unauthenticated users are more dangerous.

Scoring PR accurately involves scrutinizing the system’s access control model and the specific context in which the vulnerability occurs. For example, if an attack can occur both before and after authentication due to a design flaw, the scenario demanding less privilege should be the basis for the metric, ensuring risk is not underestimated.

4. User Interaction

User interaction (UI) specifies whether successful exploitation depends on actions from users other than the attacker. If exploitation is possible without non-attacker participation, the metric is set to “none,” yielding a higher CVSS score. If user actions (like clicking a link or downloading a file) are required, the score is reduced, acknowledging that social engineering or user error is a factor.

Understanding the necessity of user involvement is essential for realistic risk evaluation. Vulnerabilities demanding user interaction often have lower exploit success rates, but some attacks leverage common user behaviors. Accurate assessment means closely analyzing the exploit chain and identifying exactly which steps hinge on user participation.
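In CVSS v3.x, the four exploitability metrics above multiply into a single exploitability subscore. The following sketch uses the metric weights published in the CVSS v3.1 specification, keyed by the single-letter codes used in v3.1 vector strings. Note that the Privileges Required weight depends on the Scope metric described next. The function and table names are illustrative, not an official implementation, and CVSS v4.0 does not use these weights.

```python
# CVSS v3.1 weights for the exploitability metrics.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.20}  # Attack Vector
AC = {"L": 0.77, "H": 0.44}                         # Attack Complexity
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}    # Privileges Required, Scope Unchanged
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.50}      # Privileges Required, Scope Changed
UI = {"N": 0.85, "R": 0.62}                         # User Interaction


def exploitability_subscore(av: str, ac: str, pr: str, ui: str,
                            scope_changed: bool = False) -> float:
    """8.22 x AV x AC x PR x UI, selecting the PR weight by Scope."""
    pr_weights = PR_CHANGED if scope_changed else PR_UNCHANGED
    return 8.22 * AV[av] * AC[ac] * pr_weights[pr] * UI[ui]


# Network-reachable, low complexity, no privileges, no user interaction.
print(round(exploitability_subscore("N", "L", "N", "N"), 2))  # 3.89
```

The multiplicative form means one restrictive condition, such as physical access, pulls the whole subscore down. Only the Low and High Privileges Required weights change with Scope.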

5. Scope

Scope refers to whether exploitation leads to an impact solely within the vulnerable component or affects other components beyond its intended security authority. “Unchanged” scope means the attack does not propagate beyond the initial vulnerable application or system. Conversely, a “changed” scope occurs if successful exploitation enables the attacker to compromise or affect security boundaries outside the original component.

The scope metric acknowledges modern complex environments where a single vulnerability can cascade into broader impacts, like escalating privileges across network boundaries or breaching sandboxed environments. Evaluating scope entails mapping the potential lateral movement or system compromise paths enabled by exploitation.

6. Confidentiality Impact

Confidentiality impact (C) represents the degree to which data confidentiality is affected by successful exploitation. The metric is set to “high” if sensitive information can be completely compromised, “low” when only some information is exposed, and “none” if no disclosure occurs.

Defining the potential confidentiality loss precisely is essential, particularly in environments handling regulated or sensitive information. Quantifying this impact requires understanding both the value and the reach of the data affected, as well as the adversary’s ability to access that data via the exploit.

7. Integrity Impact

Integrity impact (I) assesses how much data accuracy, trustworthiness, or authenticity is compromised if the vulnerability is exploited. “High” means attackers can fully alter data or system state, potentially permitting unauthorized transactions or malicious payloads. “Low” reflects partial modification or tampering with limited potency, while “none” indicates no impact on integrity.

Correctly scoring integrity requires mapping the exploit’s consequences for business operations, system processes, and user confidence. Systems where integrity is paramount—such as financial or safety-critical systems—may amplify this metric’s relevance and downstream risk.

8. Availability Impact

Availability impact (A) measures the potential for an exploit to disrupt system or data accessibility. “High” impact means critical loss—services may stop responding entirely or become unusable, “Low” reflects reduced performance or intermittent outages, and “none” means the service remains unaffected.

For organizations where uptime is closely tied to business objectives, an accurate assessment of the availability metric helps set vulnerability remediation priorities. Quantifying both the scale and duration of potential disruptions is key to appropriately reflecting the availability risk posed by a vulnerability.
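In CVSS v3.x, the three impact metrics combine into an Impact Subscore (ISS) that grows with each additional type of loss but never exceeds 1. The following sketch uses the v3.1 weights for High, Low, and None. The function name `impact_subscore` is illustrative.

```python
# CVSS v3.1 weights for Confidentiality, Integrity, and Availability impact.
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def impact_subscore(c: str, i: str, a: str) -> float:
    """ISS = 1 - (1 - C) x (1 - I) x (1 - A)."""
    return 1 - (1 - CIA[c]) * (1 - CIA[i]) * (1 - CIA[a])


print(round(impact_subscore("H", "H", "H"), 6))  # 0.914816
```

Multiplying the complements rather than summing the weights keeps a vulnerability with total loss across all three properties below 1.0, so the impact side of the Base Score stays bounded.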

How Is the CVSS Temporal Score Computed?

9. Exploit Code Maturity

Exploit code maturity captures the current availability and reliability of exploit code for a vulnerability. High maturity indicates widely available, reliable exploit tools, while lower values apply when exploits are theoretical or require significant effort to develop. As proof-of-concept or fully weaponized code emerges, updating this metric raises the score to reflect real-world risk.

Monitoring exploit code maturity helps organizations prioritize patching and compensating controls. A previously theoretical risk may quickly escalate once exploit kits appear, driving up risk assessments even before incidents occur. Periodic review of sources like vulnerability databases and exploit marketplaces keeps maturity metrics updated.

10. Remediation Level

Remediation level reflects whether and how a vulnerability can currently be addressed. Values range from “official fix,” a complete vendor patch or update, through “temporary fix” and “workaround,” to “unavailable,” where no mitigation, workaround, or patch exists. Less complete remediation yields a higher score. This temporal measurement is critical for understanding short-term exposure windows and allocating resources more effectively.

As vulnerabilities evolve, remediation levels often change. Continuous tracking of vendor guidance, available patches, or recommended workarounds ensures accurate assessment. Lower remediation levels should prompt interim risk mitigation measures and heightened monitoring until a robust solution becomes available.

11. Report Confidence

Report confidence indicates how confident organizations can be in the existence of a vulnerability and the accuracy of its technical details. Values range from “confirmed” (verified by the vendor or reliable third parties) to “reasonable” (credible sources, but lacking independent validation) or “unknown” (insufficient evidence). Higher confidence strengthens the justification for assigning higher severity and prioritizing response.

Frequent re-evaluation of this metric is valuable, especially as bug reports mature from preliminary discoveries to well-documented, reproducible, and vendor-acknowledged issues. Clear evidence—including public advisories or cross-confirmation from multiple sources—should always drive confidence scoring updates.
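In CVSS v3.1, the three temporal metrics act as multipliers of at most 1.0 on the Base Score, followed by the specification's round-up-to-one-decimal rule. The following sketch uses the v3.1 weights ("X" means Not Defined). The function names are illustrative. `roundup` follows the Roundup function described in the CVSS v3.1 specification, which works on integers to avoid floating-point artifacts.

```python
# CVSS v3.1 temporal weights ("X" = Not Defined).
E = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}   # Exploit Code Maturity
RL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}  # Remediation Level
RC = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}             # Report Confidence


def roundup(value: float) -> float:
    """Smallest number with one decimal place that is >= value (v3.1 Roundup)."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (int_input // 10_000 + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    return roundup(base * E[e] * RL[rl] * RC[rc])


# A 9.8 Base Score with proof-of-concept code, an official fix, and a confirmed report.
print(temporal_score(9.8, e="P", rl="O", rc="C"))  # 8.8
```

Because every weight is at most 1.0, the Temporal Score can only stay equal to or fall below the Base Score. This is one reason tools that publish only Base Scores tend to overstate risk for vulnerabilities that are already patched or have no known exploit.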

How Is the CVSS Environmental Score Computed?

12. Confidentiality Requirement

Confidentiality requirement (CR) allows organizations to emphasize or de-emphasize confidentiality in their environment’s risk calculus. If protecting sensitive data is mission-critical, organizations can increase the confidentiality weight, causing relevant vulnerabilities to score higher, especially where data loss or exposure impacts compliance or reputation.

Unlike the confidentiality impact base metric, which describes the vulnerability itself, CR tailors risk calculations to an organization’s specific data needs. Organizations in regulated sectors such as healthcare or finance should review this requirement and, where necessary, raise it to reflect how critical confidentiality is in their context.

13. Integrity Requirement

Integrity requirement (IR) lets organizations specify how essential data integrity is to their business. Raising this requirement increases the severity of vulnerabilities that impact data accuracy or trustworthiness—important in industries like manufacturing, finance, or healthcare where data corruption has operational or safety consequences.

In environments where manipulation of information could influence strategic decisions or endanger lives, the IR factor should be set high. Accurate alignment of the IR metric with business priorities ensures vulnerability scores support effective risk and remediation decisions.

14. Availability Requirement

Availability requirement (AR) is used to increase or decrease the relative importance of system uptime in the vulnerability score calculation. A high AR is assigned where service outages or system downtime could significantly harm operations, as in telecommunications or critical-infrastructure organizations.

By setting AR properly, organizations can drive remediation urgency for vulnerabilities that threaten availability, such as denial-of-service exploits. This customization ensures resource allocation for patching aligns with the specific operational risks inherent in their business.
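In CVSS v3.1, the three requirements weight the corresponding (modified) impact values before they are combined, and the result is capped at 0.915. The following sketch shows that modified impact calculation. The requirement weights (High 1.5, Medium 1.0, Low 0.5, Not Defined 1.0), the cap, and the Scope-changed equation are taken from the v3.1 specification as we understand it. Function and table names are illustrative, and CVSS v4.0 applies requirements differently.

```python
# CVSS v3.1 weights: impact values and security requirements ("X" = Not Defined).
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
REQ = {"X": 1.0, "H": 1.5, "M": 1.0, "L": 0.5}


def modified_impact(mc: str, mi: str, ma: str,
                    cr: str = "X", ir: str = "X", ar: str = "X",
                    scope_changed: bool = False) -> float:
    """Requirement-weighted impact term used by the v3.1 Environmental Score."""
    miss = min(1 - (1 - REQ[cr] * CIA[mc])
                 * (1 - REQ[ir] * CIA[mi])
                 * (1 - REQ[ar] * CIA[ma]), 0.915)
    if scope_changed:
        return 7.52 * (miss - 0.029) - 3.25 * (miss * 0.9731 - 0.02) ** 13
    return 6.42 * miss


# The same availability-only (denial-of-service) impact under different AR settings.
print(round(modified_impact("N", "N", "H", ar="H"), 4))  # 5.3928
print(round(modified_impact("N", "N", "H", ar="L"), 4))  # 1.7976
```

Tripling the availability weight from Low to High triples the impact term here, which is how a high AR moves denial-of-service issues up the queue without changing the published Base Score.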

15. Modified Base Metrics and Their Roles

Modified base metrics allow organizations to override any base metric value to reflect compensating controls, configurations, or unique asset characteristics. For example, a system behind multi-layered defenses may warrant a less severe modified attack vector or privileges required value than the generic default, which lowers its environmental score.

Using modified metrics helps organizations avoid both overestimating and underestimating risk. Proper application ensures CVSS scoring reflects real-world defense in depth, threat environments, and asset functionality, resulting in more actionable vulnerability remediation priorities.

Tips from the Expert

Rob Gurzeev, CEO and Co-Founder of CyCognito, has led the development of offensive security solutions for both the private sector and intelligence agencies.

In my experience, here are tips that can help you better apply and operationalize CVSS scoring for effective attack surface management:

- Incorporate business function impact mapping: CVSS doesn’t factor in how a vulnerability affects specific business processes. Augment scores by mapping vulnerabilities to business-critical functions, allowing prioritization based not just on severity, but on the operational disruption potential.

- Tag vulnerabilities with exploitation velocity: Track how quickly a vulnerability progresses from disclosure to weaponization in your environment or sector. Assign a “velocity” tag that helps prioritize fast-moving threats, especially those with low initial CVSS scores that escalate quickly in the wild.

- Use CVSS differentials for patch justification: When proposing patching or mitigation, show the delta between the base CVSS score and the score after environmental customization. This helps justify urgency to business stakeholders and ensures remediation aligns with your actual risk context.

- Score based on asset exposure, not theoretical access: Instead of scoring “attack vector” or “privileges required” based on the theoretical worst-case, use actual asset exposure from ASM tools (e.g., external-facing status, user access models) to reflect real exploitability in your environment.

- Apply decay logic to outdated vulnerabilities: For vulnerabilities that remain unpatched over time but are no longer actively exploited or relevant (e.g., end-of-life software on isolated systems), develop a decay model that reduces their priority, preventing alert fatigue from stale risks.
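The decay tip above does not prescribe a formula. One simple option, assumed here purely for illustration, is exponential decay: a finding's priority halves for every fixed period that passes without a new exploitation signal. The function name `decayed_priority` and the 90-day default half-life are hypothetical choices. The result is an internal ordering value and should not be reported as a CVSS score.

```python
def decayed_priority(cvss_score: float, days_without_signal: float,
                     half_life_days: float = 90.0) -> float:
    """Hypothetical decay model: priority halves every `half_life_days`
    that pass without a new exploitation signal for the finding."""
    if days_without_signal < 0:
        raise ValueError("days_without_signal must be non-negative")
    if half_life_days <= 0:
        raise ValueError("half_life_days must be positive")
    return cvss_score * 0.5 ** (days_without_signal / half_life_days)


print(decayed_priority(8.0, 0))    # 8.0
print(decayed_priority(8.0, 90))   # 4.0
print(decayed_priority(8.0, 180))  # 2.0
```

Resetting `days_without_signal` to zero whenever new exploit activity is observed lets a stale finding return to full priority immediately, which pairs naturally with the exploitation-velocity tip.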

Technical Deep Dive: The CVSS Scoring Formula

CVSS v3.x calculates a numeric score using a defined formula that combines selected values from the Base, Temporal, and Environmental metrics. Each metric value has an assigned numeric weight that feeds into the formula. The Base Score is computed first, as it represents the intrinsic qualities of a vulnerability. It combines an impact subscore (confidentiality, integrity, availability) with an exploitability subscore (attack vector, attack complexity, privileges required, user interaction), and Scope determines which impact equation applies. CVSS v4.0 does not use these equations. Instead, it looks up precomputed scores for groups of metric combinations (MacroVectors) and refines them by interpolation. In CVSS v3.x, the impact term is:

If Scope is Unchanged: Impact = 6.42 × Impact Subscore

If Scope is Changed: Impact = 7.52 × (Impact Subscore − 0.029) − 3.25 × (Impact Subscore − 0.02)^15

Where the Impact Subscore (ISS) is computed from the confidentiality (C), integrity (I), and availability (A) impact weights: ISS = 1 − [(1 − C) × (1 − I) × (1 − A)].

The Exploitability Subscore is calculated as: 8.22 × Attack Vector × Attack Complexity × Privileges Required × User Interaction, where the Low and High Privileges Required weights are higher when Scope is Changed.

The final Base Score is 0 if the impact term is zero or negative. Otherwise, it is the sum of the impact and exploitability subscores (multiplied by 1.08 when Scope is Changed), capped at 10 and rounded up to one decimal place.
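The following is a minimal sketch of the v3.x Base Score equations above. To stay self-contained, it takes the ISS and the exploitability subscore as numeric inputs. `roundup` follows the Roundup function described in the CVSS v3.1 specification, which rounds up to one decimal place using integer arithmetic to avoid floating-point artifacts. The function names are illustrative, not an official implementation.

```python
def roundup(value: float) -> float:
    """Smallest number with one decimal place that is >= value (v3.1 Roundup)."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (int_input // 10_000 + 1) / 10.0


def base_score(iss: float, exploitability: float, scope_changed: bool) -> float:
    """CVSS v3.x Base Score from the Impact and Exploitability subscores."""
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    if impact <= 0:
        return 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


# AV:N/AC:L/PR:N/UI:N with C:H/I:H/A:H gives ISS = 0.914816 and
# exploitability = 3.887042775 under the v3.1 weights.
print(base_score(0.914816, 3.887042775, scope_changed=False))  # 9.8
print(base_score(0.914816, 3.887042775, scope_changed=True))   # 10.0
```

Rounding up rather than to the nearest value means a sum such as 9.76 is reported as 9.8, so published scores never understate the computed value.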

The Temporal Score is the Base Score multiplied by Exploit Code Maturity × Remediation Level × Report Confidence, again rounded up to one decimal place.

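Finally, the Environmental Score reruns the base equations using any Modified Base Metrics, weights the confidentiality, integrity, and availability impacts by the Confidentiality, Integrity, and Availability Requirements, and then applies the same temporal multipliers.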

CVSS vs. CVE

CVSS and CVE serve different but complementary roles in vulnerability management. The Common Vulnerabilities and Exposures (CVE) system provides unique identifiers and descriptive records for publicly known information security vulnerabilities. CVSS, on the other hand, offers a formalized scoring mechanism that quantifies the severity of each CVE-identified vulnerability.

Organizations use CVEs to track vulnerabilities and CVSS scores to measure and prioritize them. A CVE entry becomes actionable when paired with a CVSS score, as this provides both a catalog reference and an objective severity assessment for patching, mitigation, and reporting workflows.

Critiques and Limitations of CVSS

Despite its widespread adoption, CVSS has several notable limitations that affect its usefulness in real environments.

- Scores used without context: CVSS base scores are often treated as final severity. Ignoring temporal or environmental metrics can lead to unnecessary focus on low-impact issues or missed attention on critical vulnerabilities in specific systems.

- Subjective metric interpretation: Analysts may interpret inputs such as privileges required or user interaction differently. This produces inconsistent scoring across teams and reduces comparability.

- No handling of vulnerability chaining: CVSS evaluates each vulnerability separately. When several low-severity issues combine to form a high-impact attack path, the system does not reflect the combined severity.

- Manual updates to temporal factors: Temporal metrics require ongoing manual changes as exploitability evolves. This causes delays in representing actual risk when new exploits appear quickly.

- No link to asset value or threat intelligence: Scores do not account for the importance of the affected system or current threat information. High scores on low-value systems may consume resources, while low scores on critical systems may obscure significant risk.

These limitations reinforce the need to pair CVSS with asset context, threat data, and analysis of attack paths for more accurate prioritization.

Best Practices for Accurate CVSS Scoring

1. Validate Impact Using Reproducible Evidence

Reproducible evidence supports the accuracy of impact assessments for each CVSS metric. Always confirm vulnerability effects—such as data leakage or privilege escalation—through hands-on testing or validated lab results. This approach minimizes speculation, ensuring the CVSS score reflects the vulnerability’s actual severity rather than hypothetical risks.

If direct testing isn’t possible, gather corroborating reports from trusted third parties, vendors, or established vulnerability repositories. Linking CVSS scoring to concrete demonstrations of impact keeps scoring consistent and transparent and helps justify remediation priorities to stakeholders.

2. Document All Assumptions Explicitly

Transparency in assumptions underlying each CVSS metric ensures that scoring can be reviewed, reproduced, and adjusted as more information arises. Clearly document all preconditions, environmental factors, and subjective choices—such as default configurations or privilege expectations—when creating or modifying a CVSS score.

This practice is especially valuable in collaborative settings or during audits, where clear documentation eliminates ambiguity and speeds up consensus. Recording assumptions allows organizations to quickly reassess scores when systems, configurations, or threat models evolve, promoting continuous alignment with real-world risk.

3. Apply Environmental Metrics Consistently

Consistent use of environmental metrics tailors CVSS scoring to your organization’s unique risk appetite, asset value, and regulatory landscape. Regularly review business and data priorities to ensure confidentiality, integrity, and availability requirements accurately reflect operational needs. Standardizing this process across teams and time periods minimizes scoring drift.

Document the rationale for any modifications to base or requirement values. This ensures that environmental adjustments remain traceable and reproducible, providing a robust foundation for internal discussions and external compliance or reporting obligations.

4. Reassess Scores as Exploitability Changes

The threat landscape is dynamic—exploit code maturity and remediation options change rapidly for many vulnerabilities. Routinely review and update CVSS scores to reflect new information about public exploits, available mitigations, or changes in likely attacker tactics. Failing to update temporal metrics can leave organizations with outdated risk assessments and misaligned vulnerability management priorities.

Change tracking mechanisms, automated monitoring, and integration with threat intelligence feeds help maintain a timely and accurate reflection of risk in your CVSS scoring practices. This vigilance supports prompt incident response and risk mitigation decisions.

5. Use CVSS Alongside Threat Intelligence Feeds

While CVSS provides a structured, repeatable approach to scoring vulnerabilities, augmenting these scores with real-time threat intelligence leads to better risk management outcomes. Threat feeds supply context—such as observed attack campaigns, adversary tactics, and industry-specific targeting—that CVSS alone cannot capture. By integrating both sources, organizations develop a more complete view of their actual exposure.

It’s important to realize that not every high-severity finding represents real risk. A vulnerability can look critical on paper, but still be unreachable in practice. Continuous outside-in testing checks whether an attacker can actually exploit an exposed system, not just whether a weakness exists.

For example, a web application may have a severe vulnerability, but if a security control like a web application firewall blocks attacks by default, exploitation may not be possible. Active testing confirms what can truly be accessed and abused from the outside, helping teams focus on issues that create real business risk rather than theoretical ones.

Beyond CVSS with CyCognito

CVSS assigns severity scores to vulnerabilities. But a high score does not confirm that an asset is externally reachable, that a vulnerability is exploitable in practice, or that it creates a meaningful attack path.

CyCognito operates beyond CVSS by grounding prioritization in real attacker reachability and exploitability. If you are already using CVSS to rank vulnerabilities, CyCognito shifts the focus from numerical severity to validated external exposure by:

- Enriching risk scoring with threat intelligence plus proprietary reachability and asset-attractiveness signals

- Identifying exposure conditions beyond CVEs, including misconfigurations and security control gaps

- Confirming which vulnerabilities are actually exploitable from an outside-in perspective through active security testing

- Prioritizing based on real attack paths, not isolated scores, and correlating with internal and cloud attack surface tools through integrations

By applying continuous external discovery and exploitability testing, CyCognito moves security teams from static scoring to exposure-driven decision making. CVSS remains a reference point, but remediation is driven by what attackers can truly reach and abuse. If you want to see how your CVSS findings look when evaluated through an attacker’s lens, request a 1:1 demo.